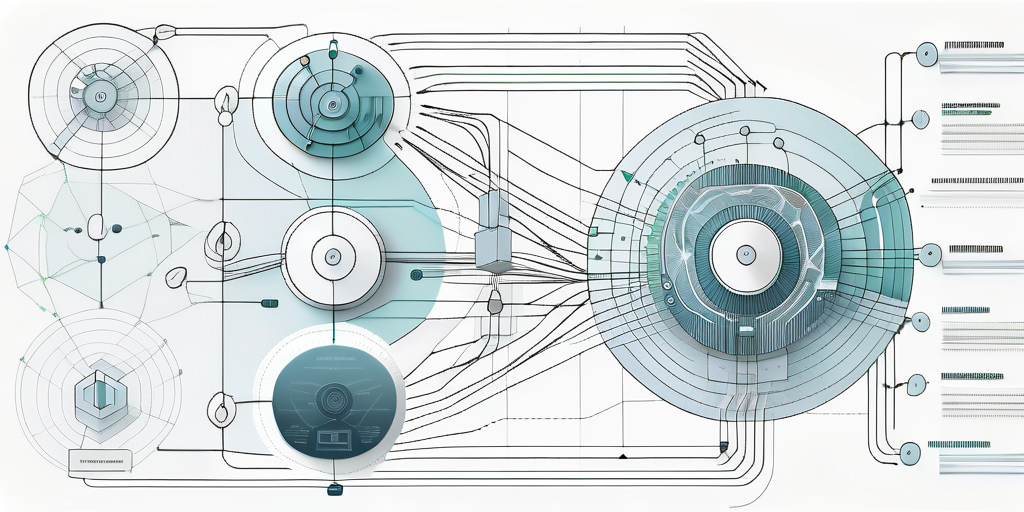

The Encoder-Decoder architecture plays a pivotal role in machine learning, especially in the Transformer design from which Large Language Models (LLMs) such as GPT-3 are derived. Understanding it helps explain how these models process input text and generate human-like output. This glossary entry covers how the Encoder-Decoder architecture works and how it relates to LLMs.

The Encoder-Decoder architecture is a type of model structure used in deep learning for converting one type of data into another. It’s a two-part system where the encoder processes the input data and the decoder takes the output of the encoder to produce the final output. This architecture is widely used in tasks that involve sequential data such as text, time series (e.g., stock prices), and more.

Understanding the Encoder-Decoder Architecture

The Encoder-Decoder architecture is a two-step process involving an encoder and a decoder. The encoder takes in the input data and converts it into a compact representation, often referred to as the context vector. This context vector is then passed to the decoder, which uses this information to produce the final output.

The encoder and decoder are typically implemented as neural networks, with the encoder capturing the input data’s salient features and the decoder using these features to generate the output. The exact structure of these networks can vary depending on the specific task at hand.

The Role of the Encoder

The encoder’s role in the Encoder-Decoder architecture is to interpret the input data and compress it into a context vector. This involves extracting the important features from the input data and discarding the irrelevant ones. The encoder accomplishes this through a series of transformations, each one designed to refine the representation of the input data.

For instance, in the case of text data, the encoder might start by converting each word in the input sentence into a dense vector using a technique like word embedding. It then processes these word vectors sequentially, updating its internal state after each word. The final state of the encoder is the context vector, which encapsulates the meaning of the entire input sentence.
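
The encoding steps described above can be sketched in a few lines of NumPy. This is a minimal illustration with untrained, randomly initialized weights, not a production encoder; the toy vocabulary, the layer sizes, and the `encode` function are all hypothetical names chosen for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy vocabulary and layer sizes, chosen only for illustration.
vocab = {"the": 0, "cat": 1, "sat": 2}
embed_dim, hidden_dim = 4, 5

# Randomly initialized parameters; a real encoder would learn these.
embedding = rng.normal(size=(len(vocab), embed_dim))
W_xh = rng.normal(size=(embed_dim, hidden_dim)) * 0.1
W_hh = rng.normal(size=(hidden_dim, hidden_dim)) * 0.1
b_h = np.zeros(hidden_dim)

def encode(tokens):
    """Run a simple recurrent encoder and return its final hidden state."""
    h = np.zeros(hidden_dim)
    for tok in tokens:
        x = embedding[vocab[tok]]               # word -> dense vector
        h = np.tanh(x @ W_xh + h @ W_hh + b_h)  # update the internal state
    return h  # the final state plays the role of the context vector

context = encode(["the", "cat", "sat"])
print(context.shape)  # (5,)
```

Whatever the length of the input sentence, the context vector has the same fixed size (`hidden_dim`), which is what makes it a compact summary.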

The Role of the Decoder

The decoder takes the context vector produced by the encoder and generates the output data. It does this by progressively building up the output in a step-by-step manner. At each step, the decoder generates one part of the output and updates its internal state. The updated state is then used to generate the next part of the output, and so on.

In the case of text data, the decoder might start by generating the first word of the output sentence. It then uses the context vector and the first word to generate the second word, and so on, until it emits an end-of-sentence marker or reaches a length limit. Because every word is conditioned on both the context vector and the words already generated, the output can stay coherent with the input and with itself.
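
The step-by-step generation described above can be sketched as a greedy decoding loop. This is a sketch under stated assumptions: the weights are random and untrained, so the words it produces are meaningless, and the output vocabulary, the `<start>`/`<end>` markers, and the `decode` function are hypothetical names used only for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical output vocabulary; index 0 starts and index 1 ends a sentence.
out_vocab = ["<start>", "<end>", "le", "chat"]
embed_dim, hidden_dim = 4, 5

# Randomly initialized (untrained) parameters, for illustration only.
out_embedding = rng.normal(size=(len(out_vocab), embed_dim))
W_xh = rng.normal(size=(embed_dim, hidden_dim)) * 0.1
W_hh = rng.normal(size=(hidden_dim, hidden_dim)) * 0.1
W_hy = rng.normal(size=(hidden_dim, len(out_vocab)))

def decode(context, max_len=10):
    """Greedily generate words, starting from the encoder's context vector."""
    h = context   # the decoder state is initialized from the context vector
    token = 0     # begin with the <start> marker
    output = []
    for _ in range(max_len):
        x = out_embedding[token]           # embed the previously generated word
        h = np.tanh(x @ W_xh + h @ W_hh)   # update the internal state
        logits = h @ W_hy                  # one score per vocabulary word
        token = int(np.argmax(logits))     # greedy choice: highest score wins
        if out_vocab[token] == "<end>":
            break
        output.append(out_vocab[token])
    return output

context = rng.normal(size=hidden_dim)  # stand-in for a real encoder output
print(decode(context))
```

Greedy selection is the simplest strategy; practical systems often use sampling or beam search instead, which this sketch does not cover.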

Encoder-Decoder Architecture in Large Language Models

The Encoder-Decoder architecture is the starting point for many Large Language Models (LLMs). Some models, such as T5 and BART, keep both the encoder and the decoder. Others, including GPT-3, keep only the decoder half and rely on it both to read the prompt and to generate the continuation.

LLMs are typically built on the Transformer, which was originally proposed as an Encoder-Decoder architecture. Instead of processing words strictly one after another, the Transformer uses self-attention to relate every part of the input to every other part, which lets the model take the surrounding context of each word into account when producing output.

Transformers and Self-Attention

The Transformer architecture, introduced in the seminal paper “Attention Is All You Need” by Vaswani et al. (2017), is a type of Encoder-Decoder architecture that replaces recurrence with self-attention. Both the encoder and the decoder are stacks of layers that use self-attention to capture the dependencies between different parts of the sequence they process, and the decoder additionally attends to the encoder’s output.

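Self-attention, which the Transformer computes as scaled dot-product attention, allows the model to weigh the importance of different words in the input when generating the output. Each word is compared with every other word to obtain a set of weights, and the word’s new representation is the weighted average of the others. For instance, when generating the next word in a sentence, the model can use self-attention to focus on the words that are most relevant to the current context.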
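
A single head of scaled dot-product self-attention can be sketched as follows. The formula, softmax(QKᵀ / √d_k)·V, is the one given in Vaswani et al.; the function names, the random projection matrices, and the sizes are assumptions made for this example, and real models learn the projections and use many heads.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over a sequence X."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v   # queries, keys, values
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)       # similarity of every pair of positions
    weights = softmax(scores, axis=-1)    # each row sums to 1
    return weights @ V, weights           # weighted average of the values

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8           # illustrative sizes only
X = rng.normal(size=(seq_len, d_model))   # one vector per word
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(X, W_q, W_k, W_v)
print(out.shape)             # (4, 8)
print(weights.sum(axis=-1))  # each entry is 1 up to rounding
```

Row `i` of `weights` shows how much word `i` draws on every word in the sequence, which is the "weighing of importance" described above.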

Application in GPT-3

GPT-3 uses a decoder-only variant of the Transformer: it has no separate encoder. The model takes a sequence of tokens as input and generates its output one token at a time, with each new token depending on all the tokens before it, both those in the prompt and those already generated.
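
The restriction that each word depends only on the words before it is commonly enforced with a causal mask on the attention scores. The sketch below shows the idea on a tiny example; `causal_attention_weights` is a hypothetical helper written for this entry, not code from GPT-3.

```python
import numpy as np

def causal_attention_weights(scores):
    """Softmax over attention scores with future positions masked out."""
    n = scores.shape[0]
    # True above the diagonal marks positions that lie in the future.
    future = np.triu(np.ones((n, n), dtype=bool), k=1)
    masked = np.where(future, -np.inf, scores)
    masked = masked - masked.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(masked)                                    # exp(-inf) is 0
    return e / e.sum(axis=-1, keepdims=True)

# Equal raw scores make the effect of the mask easy to read.
scores = np.zeros((3, 3))
weights = causal_attention_weights(scores)
print(weights)
# Row 0 attends only to position 0, row 1 splits evenly over positions 0-1,
# and row 2 splits evenly over positions 0-2.
```

The zeros above the diagonal mean that a position never receives information from positions that come after it.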

The model uses self-attention to understand the context of each word in the input sequence, allowing it to generate output that is contextually appropriate. This is what enables GPT-3 to generate text that is remarkably human-like in its quality, even when dealing with complex topics or nuanced language.

Challenges and Limitations

While the Encoder-Decoder architecture, and by extension the Transformer architecture, has proven highly effective in tasks involving sequential data, it is not without its challenges and limitations. Two stand out: the cost and difficulty of training these models, and the problem of handling long-range dependencies in the input.

Training Difficulties

Training Transformer-based models, particularly LLMs like GPT-3, requires large amounts of data and computational resources. The models need to be trained on millions, if not billions, of sentences to learn the patterns and structures of the language. This requires a significant amount of computational power, making the training process costly, time-consuming, and out of reach for many researchers and practitioners.

Furthermore, the training process can be difficult to optimize. The models have anywhere from millions to hundreds of billions of parameters (GPT-3 has 175 billion), all of which are adjusted during training, and choosing hyperparameters such as the learning rate and batch size so that training converges can be a challenging task. This is further complicated by the fact that the models are often trained on diverse and unstructured data, making it difficult to ensure that they are learning the right patterns.

Handling Long-Range Dependencies

Another challenge with the Encoder-Decoder architecture is handling long-range dependencies in the input data. In recurrent encoder-decoders, the entire input must pass through a fixed-size context vector, so details from early in a long input are easily lost. Self-attention in the Transformer eases this by letting the model look directly at any position, but the model still sees only a limited window of text and can struggle with dependencies that span large distances within it.

This can result in output that is grammatically correct but semantically nonsensical. For instance, the model might generate a fluent sentence that contradicts something stated many sentences earlier. This is a significant limitation, as it can affect the quality of the output and the usefulness of the model in practical applications.
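
One practical reason long inputs are hard, offered here as background rather than something stated above, is that standard self-attention compares every position with every other position, so the number of attention scores grows with the square of the sequence length. The helper below is a hypothetical illustration of that arithmetic for a single attention head.

```python
def attention_score_count(seq_len):
    """Number of query-key scores one attention head computes for one sequence."""
    return seq_len * seq_len

for n in (512, 2048, 8192):
    print(n, attention_score_count(n))
# 512 262144
# 2048 4194304
# 8192 67108864
```

Quadrupling the sequence length multiplies the number of scores by sixteen, which is why the length of text a model can consider at once is bounded in practice.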

Conclusion

The Encoder-Decoder architecture is a powerful tool in the field of machine learning and artificial intelligence, particularly in the realm of Large Language Models. Together with the decoder-only variants derived from it, it allows these models to process input text and generate human-like output, making them useful in a wide range of applications.

However, like any tool, it is not without its challenges and limitations. The difficulty of training these models and the problem of handling long-range dependencies are significant hurdles that researchers and practitioners need to overcome. Despite these challenges, the Encoder-Decoder architecture remains a cornerstone of modern machine learning and a key component of many cutting-edge models.