Sequence modeling is a core concept in machine learning, particularly in the development and application of Large Language Models (LLMs) like ChatGPT. This glossary entry explains what sequence modeling is, why it matters for LLMs, and how it shapes the way these models work.

As we navigate through the complexities of sequence modeling, we’ll explore its theoretical underpinnings, practical applications, and the challenges it presents. We’ll also examine how it’s used in LLMs, with a special emphasis on ChatGPT, to generate human-like text based on a given input sequence.

Understanding Sequence Modeling

Sequence modeling is a type of machine learning technique where the order of the data points matters. It’s used in various fields, from natural language processing to speech recognition, and it’s particularly important when dealing with time-series data, where the sequence of data points can reveal patterns or trends.

At its core, sequence modeling is most often framed as predicting the next item in a sequence, given the previous items. This can be as simple as predicting the next word in a sentence, or as complex as predicting the next move in a chess game. The key is that the model must use the context provided by the previous items in the sequence.
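
To make next-item prediction concrete, here is a minimal sketch of a bigram model, one of the simplest possible sequence models. It only looks at the single previous word, and the toy corpus and the function names `train_bigram` and `predict_next` are illustrative assumptions, not part of any particular library.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, prev):
    """Return the most frequent follower of `prev`, or None if `prev` was never followed by anything."""
    followers = counts.get(prev)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Toy corpus (hypothetical): "the" is followed by "cat" twice and "mat" once.
corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigram(corpus)

print(predict_next(model, "the"))  # cat
```

A one-word context is far too short for real language, which is why the models discussed later in this entry condition on much longer histories.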

The Importance of Context in Sequence Modeling

Context is a crucial element in sequence modeling. Without context, a model would struggle to make accurate predictions. For instance, in language modeling, the word ‘bank’ could refer to a financial institution or the side of a river, depending on the context. A good sequence model can use the context provided by the previous words in a sentence to make an accurate prediction.

Context is also important in dealing with ambiguity. Many sequences, particularly in language, are ambiguous and can be interpreted in different ways. A sequence model needs to be able to handle this ambiguity and make predictions that are consistent with the most likely interpretation of the sequence.

Types of Sequence Models

There are several types of sequence models, each with its own strengths and weaknesses. Some of the most common types include Hidden Markov Models (HMMs), Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, and Transformer models.

HMMs are a type of probabilistic sequence model that assumes a set of hidden states that generate the observed data. RNNs, on the other hand, use recurrent connections to process sequences one step at a time, making them capable of handling sequences of arbitrary length. LSTM networks are a type of RNN that use gated memory cells to mitigate the vanishing gradient problem, which makes it hard for plain RNNs to learn dependencies that span many time steps. Transformer models, like the one used in ChatGPT, use self-attention mechanisms to weigh the importance of different parts of the sequence when making predictions.
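
The self-attention idea can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions: the queries, keys, and values are all the raw input vectors, whereas real Transformer layers first apply learned projection matrices and use multiple attention heads. The function name `self_attention` and the example vectors are hypothetical.

```python
import math

def softmax(xs):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = inputs.

    Assumption: no learned projections, single head.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # Score every position against the current one, then normalize.
        scores = [dot(q, k) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        # Each output is a weighted average of all input vectors.
        out = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(seq)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one position attends to every other position, which is the "weighing of importance" described above.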

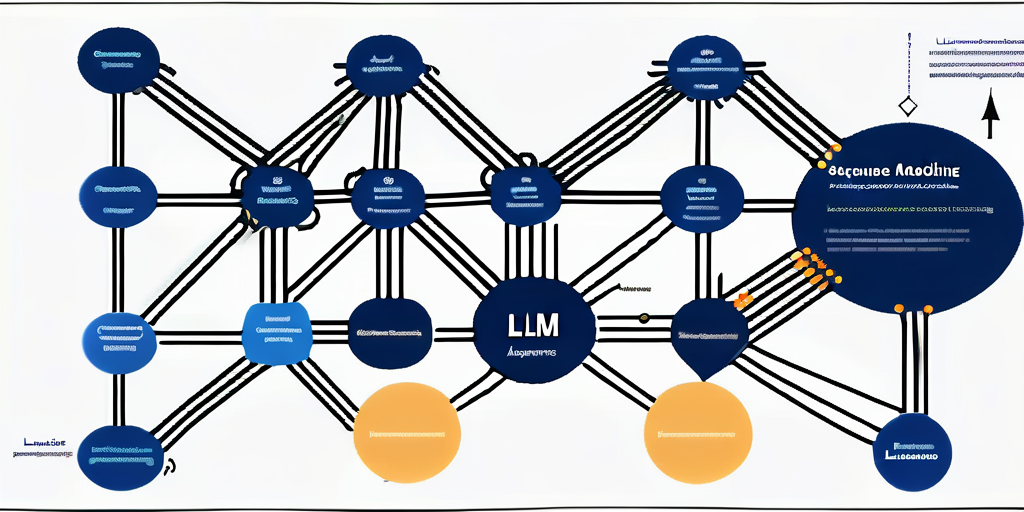

Sequence Modeling in Large Language Models

Large Language Models (LLMs) like ChatGPT use sequence modeling to generate human-like text. They take a sequence of tokens (words or pieces of words) as input and predict the next token. The predicted token is appended to the input and the process repeats until the response is complete, for example when the model emits an end-of-sequence token or reaches a length limit.
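
The repeated predict-and-append process described above can be sketched as a short loop. The "model" here is a hypothetical lookup table standing in for a real network, and names such as `generate`, `toy_next_token`, and the `<eos>` stop marker are assumptions made for illustration.

```python
def generate(next_token_fn, prompt, max_new_tokens=10, stop_token="<eos>"):
    """Repeatedly ask the model for the next token and append it to the sequence."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        nxt = next_token_fn(tokens)
        if nxt == stop_token:
            break  # the model signalled that the sequence is complete
        tokens.append(nxt)
    return tokens

# Hypothetical stand-in for a trained model: it only looks at the last token.
TOY_TABLE = {"the": "cat", "cat": "sat", "sat": "down", "down": "<eos>"}

def toy_next_token(tokens):
    return TOY_TABLE.get(tokens[-1], "<eos>")

print(" ".join(generate(toy_next_token, ["the"])))  # the cat sat down
```

This sketch always takes the single prediction the model returns. Production systems typically sample from a probability distribution over tokens instead, which is one reason the same prompt can yield different outputs.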

The power of LLMs lies in their ability to model the complex dependencies between words in a sequence. They can capture the context of a sentence, interpret each word in light of the words around it and its position in the sequence, and generate text that is coherent and contextually relevant.

Training LLMs with Sequence Modeling

The training process for LLMs involves feeding them large amounts of text data and training them to predict the next word in a sequence. This is done using a technique called maximum likelihood estimation, where the model’s parameters are adjusted to maximize the probability it assigns to the actual next word given the previous words in the sequence. In practice, this is equivalent to minimizing the cross-entropy (negative log-likelihood) loss over the training text.
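
As a rough illustration of the maximum likelihood objective, the sketch below computes the average negative log-likelihood for two hypothetical models. The probability lists are made-up numbers representing the probability each model assigned to the word that actually came next; a lower loss means a better fit to the data.

```python
import math

def negative_log_likelihood(token_probs):
    """Average negative log-probability assigned to the observed next tokens."""
    return -sum(math.log(p) for p in token_probs) / len(token_probs)

# Hypothetical probabilities that two models gave to the true next word
# at three positions in a training sentence.
confident = [0.9, 0.8, 0.95]
uncertain = [0.3, 0.2, 0.25]

print(negative_log_likelihood(confident))
print(negative_log_likelihood(uncertain))
```

Training nudges the model’s parameters so that this quantity decreases across the whole training corpus, which is the same as making the observed text more probable under the model.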

During training, the model learns to recognize patterns in the data, such as the typical order of words in a sentence, the use of certain words in specific contexts, and the grammatical rules of the language. This allows the model to generate text that is not only syntactically correct, but also semantically meaningful.

ChatGPT and Sequence Modeling

ChatGPT, a popular LLM developed by OpenAI, uses sequence modeling to generate human-like text. It’s based on the Transformer model, which allows it to handle long sequences of text and capture complex dependencies between words.

ChatGPT is trained on a diverse range of internet text, which allows it to generate text on a wide variety of topics. However, it’s important to note that ChatGPT doesn’t understand the text in the way humans do. Instead, it learns patterns in the data and uses these patterns to generate text that is likely to follow the input sequence.

Challenges in Sequence Modeling

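While sequence modeling is a powerful technique, it’s not without its challenges. One of the main challenges is dealing with long sequences. As the length of the sequence increases, the model needs to maintain a larger context, which is computationally expensive; in standard self-attention, for example, the cost grows quadratically with sequence length. In recurrent models, long sequences also aggravate the vanishing gradient problem, where the training signal from distant positions shrinks toward zero.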
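
A back-of-the-envelope sketch shows why gradients can vanish over long sequences. It assumes, as a deliberate simplification, that the gradient is scaled by the same constant factor at every time step; in a real recurrent network the factor varies from step to step. The function name `gradient_scale` and the factor 0.9 are illustrative choices.

```python
def gradient_scale(per_step_factor, num_steps):
    """Overall scaling of a gradient after flowing back through `num_steps` steps,
    assuming the same multiplicative factor at every step."""
    return per_step_factor ** num_steps

for steps in (10, 50, 100):
    print(steps, gradient_scale(0.9, steps))
```

Even a factor close to 1 shrinks the signal by several orders of magnitude after a hundred steps, so early items in a long sequence have very little influence on learning.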

Another challenge is handling ambiguity. Many sequences can be interpreted in different ways, and a model needs to be able to handle this ambiguity and make predictions that are consistent with the most likely interpretation. This requires sophisticated models and large amounts of training data.

Overcoming Challenges in Sequence Modeling

There are several strategies for overcoming these challenges. One approach is to use more sophisticated models, like LSTM networks or Transformer models, that are better equipped to handle long sequences and ambiguity. LSTMs use gating to control how much information is kept or discarded at each step, while Transformers use self-attention to weigh the importance of different parts of the sequence.

Another approach is to use more training data. The more data a model has to learn from, the better it can model the complexities of the sequence. However, this comes with its own challenges, as collecting and processing large amounts of data can be time-consuming and expensive.

Future of Sequence Modeling in LLMs

The future of sequence modeling in LLMs is promising. With advances in model architecture, training techniques, and hardware, we can expect to see LLMs that are capable of generating even more realistic and contextually relevant text.

However, as these models become more powerful, they also raise important ethical and societal questions. For instance, how do we ensure that these models are used responsibly and don’t amplify harmful biases in the data? These are important questions that the research community is actively working to address.

Advancements in Sequence Modeling

There are several areas where we can expect to see advancements in sequence modeling. One area is in the development of more sophisticated models that can better handle long sequences and ambiguity. For instance, we might see models that use more advanced attention mechanisms or that incorporate external knowledge sources to improve their predictions.

Another area of advancement is in the training process. With advances in hardware and training techniques, we can train models on larger and more diverse datasets, which can help them better capture the complexities of the sequence.

Ethical Considerations in Sequence Modeling

As sequence modeling becomes more prevalent, it’s important to consider the ethical implications. For instance, how do we ensure that these models don’t amplify harmful biases in the data? How do we ensure that they are used responsibly and don’t generate harmful or misleading content?

These are complex questions that don’t have easy answers. However, they are important to consider as we continue to develop and deploy these powerful models. By keeping these considerations in mind, we can work towards a future where sequence modeling is used responsibly and to the benefit of all.