The Variational Autoencoder (VAE) is a type of autoencoder, a neural network designed to learn efficient encodings of data in an unsupervised manner. The primary aim of an autoencoder is to learn a representation (encoding) for a set of data, typically for the purpose of dimensionality reduction. VAEs take this a step further by making the encoding process probabilistic.

VAEs are widely used in machine learning, particularly in generative modeling. They have been applied to tasks such as image synthesis, anomaly detection, and reinforcement learning. Understanding the principles behind VAEs is useful for anyone interested in the cutting edge of machine learning research and application.

Understanding Autoencoders

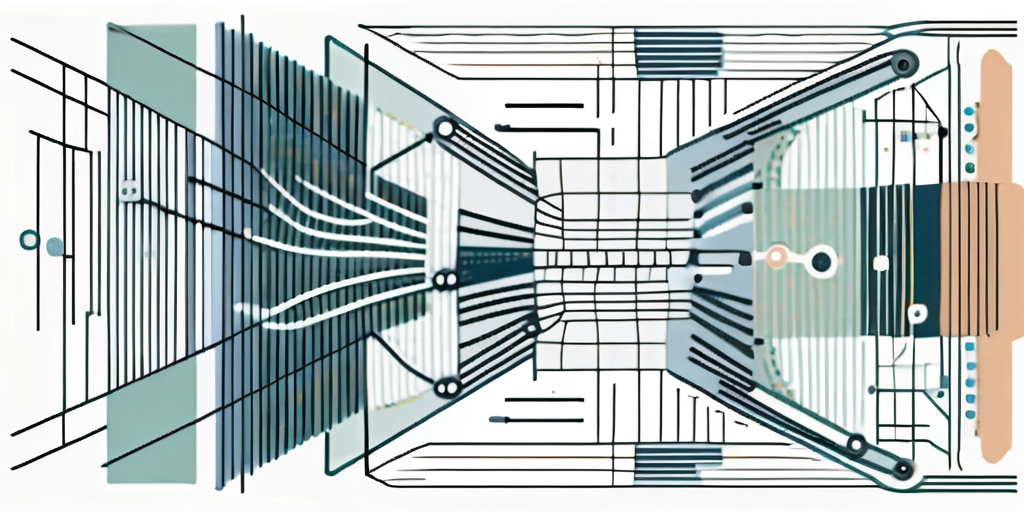

Before delving into the specifics of Variational Autoencoders, it’s important to understand the concept of an autoencoder. In essence, an autoencoder is a neural network that is trained to copy its input to its output. This might seem trivial, but the network has a hidden layer that is smaller than the input layer, which forces the network to learn a compressed representation of the input data.

This small hidden layer is often referred to as the ‘bottleneck’ layer, as it restricts the amount of information that can pass through the network. By training the network to reproduce the input data from the bottleneck layer's output, the network learns to encode the most important features of the data there, and to decode this representation back into an approximation of the original data.

Encoder and Decoder

An autoencoder consists of two main components: an encoder and a decoder. The encoder takes the input data and compresses it into a lower-dimensional representation. This is typically achieved by passing the input data through a series of layers that progressively decrease in size.

The decoder takes the compressed representation produced by the encoder and attempts to reconstruct the original input data. This is typically achieved by passing the compressed representation through a series of layers that progressively increase in size, mirroring the structure of the encoder.
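The mirrored layout described above can be sketched in a few lines of Python. The layer widths here (784, 256, 64, 16) are hypothetical, chosen only to show the shape of the idea, and `layer_shapes` is an illustrative helper rather than part of any library.

```python
def layer_shapes(sizes):
    """Return (inputs, outputs) pairs for consecutive fully connected layers."""
    return list(zip(sizes[:-1], sizes[1:]))

# Hypothetical widths: a 784-value input squeezed down to a 16-value code.
encoder_sizes = [784, 256, 64, 16]
# Mirrored decoder: the same widths in reverse order.
decoder_sizes = encoder_sizes[::-1]

encoder_layers = layer_shapes(encoder_sizes)
decoder_layers = layer_shapes(decoder_sizes)

print(encoder_layers)  # [(784, 256), (256, 64), (64, 16)]
print(decoder_layers)  # [(16, 64), (64, 256), (256, 784)]
```

The last encoder layer and the first decoder layer meet at the 16-value code, which is the compressed representation.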

Training an Autoencoder

Autoencoders are trained using backpropagation, much like any other neural network. The difference is that instead of using a separate set of target outputs, the target outputs for an autoencoder are the inputs themselves. This means that the network is trained to minimize the reconstruction error: the difference between the input data and the reconstructed data produced by the decoder, commonly measured with a loss such as mean squared error.

This training process forces the autoencoder to learn a compressed representation of the input data that retains as much of the original information as possible. This makes autoencoders particularly useful for tasks such as dimensionality reduction, where the goal is to reduce the size of the data while preserving its underlying structure.
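As a rough illustration of this training loop, the sketch below fits a tiny linear autoencoder (2 inputs, a 1-value bottleneck, 2 outputs) with hand-written gradient descent in plain Python. The toy data, learning rate, and epoch count are assumptions made for the example; a real autoencoder would normally be built with a deep learning framework and nonlinear layers.

```python
import random

def train_linear_autoencoder(data, epochs=300, lr=0.05, seed=0):
    """Train a 2 -> 1 -> 2 linear autoencoder with plain gradient descent.

    Returns (w, v, losses): encoder weights, decoder weights, and the mean
    squared reconstruction error recorded at each epoch.
    """
    rng = random.Random(seed)
    w = [rng.uniform(-0.5, 0.5) for _ in range(2)]  # encoder: z = w . x
    v = [rng.uniform(-0.5, 0.5) for _ in range(2)]  # decoder: x_hat = v * z
    losses = []
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_v = [0.0, 0.0]
        total = 0.0
        for x in data:
            z = w[0] * x[0] + w[1] * x[1]        # encode to the bottleneck
            x_hat = [v[0] * z, v[1] * z]         # decode back to 2 values
            err = [x_hat[i] - x[i] for i in range(2)]
            total += err[0] ** 2 + err[1] ** 2   # the target is the input itself
            # Backpropagate the squared error through the decoder, then the encoder.
            dz = 2 * (err[0] * v[0] + err[1] * v[1])
            for i in range(2):
                grad_v[i] += 2 * err[i] * z
                grad_w[i] += dz * x[i]
        for i in range(2):
            v[i] -= lr * grad_v[i] / n
            w[i] -= lr * grad_w[i] / n
        losses.append(total / n)
    return w, v, losses

# Toy data: points close to the line x2 = 2 * x1, so a single number
# is enough to describe each point.
data_rng = random.Random(1)
data = []
for _ in range(100):
    t = data_rng.uniform(-1, 1)
    data.append((t, 2 * t + data_rng.gauss(0, 0.05)))

w, v, losses = train_linear_autoencoder(data)
print(f"first-epoch loss: {losses[0]:.4f}, last-epoch loss: {losses[-1]:.4f}")
```

Because the data is almost one-dimensional, the single bottleneck value can capture nearly all of it, and the reconstruction error falls sharply over training.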

Introduction to Variational Autoencoders

While traditional autoencoders can learn to compress data and reconstruct it, they are poorly suited to generating new data. Nothing in their training constrains how the compressed representations are arranged, so decoding an arbitrary point in that space often produces output that looks nothing like the training data. This is where Variational Autoencoders come in. VAEs are a type of generative model, which means they can learn to generate new data that is similar to the data they were trained on.

The key innovation of VAEs is that they do not just learn a fixed compressed representation of the input data. Instead, they learn a distribution over possible representations. This means that instead of producing a single output for a given input, a VAE can produce a range of outputs, each with a certain probability.

Probabilistic Encoding

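In a VAE, the encoder does not produce a single compressed representation for each input. Instead, it produces two things: a mean and a standard deviation for each dimension of the representation. These values define a Gaussian distribution, and the actual compressed representation is drawn as a sample from it.

This process is known as probabilistic encoding. By sampling different values from the Gaussian distribution for a given input, the VAE can produce different outputs, each representing a plausible reconstruction of the input data. During training, a regularization term (a KL divergence) also keeps these distributions close to a standard normal distribution, which is what lets a trained VAE generate new data: a representation can be sampled from that standard normal distribution and passed through the decoder.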
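The sampling step can be sketched as follows. The mean and standard deviation values are hypothetical stand-ins for what an encoder might output for one input, and `sample_latent` is an illustrative helper. Many implementations have the encoder output a log-variance instead of a standard deviation; the sketch uses the standard deviation directly to match the description above.

```python
import random

def sample_latent(mean, std, rng):
    """Draw one latent vector from a Gaussian with the given per-dimension mean and std."""
    return [rng.gauss(m, s) for m, s in zip(mean, std)]

# Hypothetical encoder output for a single input, using a 2-value representation.
mean = [0.8, -1.2]
std = [0.3, 0.6]

rng = random.Random(0)
samples = [sample_latent(mean, std, rng) for _ in range(5)]
for z in samples:
    # Each draw is a different representation of the same input.
    print([round(value, 3) for value in z])
```

Each printed vector lies near the mean, with more spread in the second dimension because its standard deviation is larger.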

Reparameterization Trick

The reparameterization trick is a key component of VAEs that allows them to be trained efficiently. The problem with probabilistic encoding is that it places a random sampling step in the middle of the network, and gradients cannot be backpropagated directly through a sampling operation. The reparameterization trick solves this problem by separating the randomness from the quantities the network computes.

In a VAE, the encoder produces a mean and a standard deviation for each input. Rather than sampling the representation directly from the resulting Gaussian, the reparameterization trick draws a noise value from a fixed standard normal distribution and computes the representation as the mean plus the standard deviation multiplied by that noise. The sample is then a deterministic, differentiable function of the encoder's outputs, with all the randomness confined to the noise term. This allows the network to be trained using backpropagation, because gradients can flow through the mean and the standard deviation.
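In its usual form, the trick writes the sample as z = mean + std * eps, with eps drawn from a standard normal distribution. The sketch below shows this for a single latent dimension and uses a finite-difference check to confirm that, once eps is fixed, z has well-defined derivatives with respect to the mean and the standard deviation. The numeric values are hypothetical, and a real implementation would rely on a framework's automatic differentiation rather than finite differences.

```python
import random

def reparameterize(mean, std, eps):
    """Return z = mean + std * eps, a deterministic function of (mean, std)."""
    return mean + std * eps

rng = random.Random(0)
eps = rng.gauss(0.0, 1.0)   # all randomness lives in eps ~ N(0, 1)
mean, std = 0.5, 0.2        # hypothetical encoder outputs for one latent dimension

z = reparameterize(mean, std, eps)

# With eps held fixed, z is differentiable in mean and std:
#   dz/dmean = 1   and   dz/dstd = eps.
# A finite-difference check confirms both derivatives.
h = 1e-6
dz_dmean = (reparameterize(mean + h, std, eps) - z) / h
dz_dstd = (reparameterize(mean, std + h, eps) - z) / h

print(f"dz/dmean ~ {dz_dmean:.4f} (expected 1)")
print(f"dz/dstd  ~ {dz_dstd:.4f} (expected eps = {eps:.4f})")
```

Sampling z directly from a Gaussian would give the same distribution of values, but it would not expose these derivatives to the training procedure.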

Applications of Variational Autoencoders

VAEs have a wide range of applications in the field of machine learning and artificial intelligence. One of the most common uses of VAEs is in generative modeling, where they are used to generate new data that is similar to the data they were trained on. This can be used for tasks such as image synthesis, where the goal is to generate new images that look like they could have come from the same dataset as the training data.

Another common use of VAEs is in anomaly detection. Because VAEs are trained to reconstruct their input data, they can be used to detect anomalies by looking for inputs that they are unable to reconstruct accurately. This can be particularly useful in fields such as cybersecurity, where anomaly detection can be used to identify unusual patterns of behavior that may indicate a security threat.

Image Synthesis

One of the most exciting applications of VAEs is in the field of image synthesis. After a VAE has been trained on a dataset of images, new images can be generated by sampling a point in the space of compressed representations and passing it through the decoder. This can be used for a wide range of tasks, from generating new examples of a particular class of images, to creating entirely new images that have never been seen before.

The ability to generate new images is particularly useful in fields such as computer graphics and animation, where it can help create realistic textures and backgrounds. It can also support data augmentation, where generated images serve as additional training data to improve the performance of a machine learning model.
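Generation from a trained VAE usually follows a simple recipe: sample a latent vector from a standard normal distribution, then run it through the decoder. The sketch below shows that recipe. `toy_decoder` is a made-up placeholder with arbitrary weights, standing in for a trained decoder network, and the 4-value output stands in for an image.

```python
import math
import random

def toy_decoder(z):
    """Placeholder for a trained decoder: maps a 2-value latent to 4 pixel intensities.

    The weights are invented for illustration; a real decoder is a trained network.
    """
    weights = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (-1.0, 1.0)]
    return [1.0 / (1.0 + math.exp(-(a * z[0] + b * z[1]))) for a, b in weights]

def generate(decoder, latent_dim, count, rng):
    """Sample latent vectors from a standard normal distribution and decode each one."""
    outputs = []
    for _ in range(count):
        z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
        outputs.append(decoder(z))
    return outputs

rng = random.Random(0)
images = generate(toy_decoder, latent_dim=2, count=3, rng=rng)
for image in images:
    print([round(p, 2) for p in image])
```

Note that the encoder is not involved at generation time; only the decoder and a source of random latent vectors are needed.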

Anomaly Detection

Anomaly detection with a VAE relies on reconstruction error. A VAE trained only on normal data learns to reconstruct similar inputs well, while inputs unlike anything seen during training tend to be reconstructed poorly.

In a typical anomaly detection scenario, a VAE would be trained on a dataset of normal behavior. Then, when a new piece of data is encountered, the VAE can attempt to reconstruct it. If the reconstruction error is high, this indicates that the new data is different from the normal behavior, and may therefore be an anomaly.
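The scenario above can be sketched as a threshold on reconstruction error. In this example `toy_reconstruct` is a made-up placeholder for a trained VAE's encode-then-decode pass, and the rule "mean plus three standard deviations of the errors on normal data" is one common heuristic, assumed here for illustration; the right threshold depends on the application.

```python
import statistics

def reconstruction_error(x, x_hat):
    """Mean squared error between an input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

def fit_threshold(normal_errors, k=3.0):
    """Threshold = mean + k * std of errors on normal data (k is a tunable assumption)."""
    return statistics.mean(normal_errors) + k * statistics.stdev(normal_errors)

def is_anomaly(x, reconstruct, threshold):
    """Flag an input whose reconstruction error exceeds the threshold."""
    return reconstruction_error(x, reconstruct(x)) > threshold

def toy_reconstruct(x):
    """Placeholder for a trained VAE: it can only reproduce values in [0, 1],
    standing in for 'the range of data seen during training'."""
    return [min(max(v, 0.0), 1.0) * 0.95 for v in x]

# Hypothetical 'normal behavior' dataset, with every value inside [0, 1].
normal_data = [[0.1 * i, 0.05 * i, 1.0 - 0.1 * i] for i in range(1, 10)]
errors = [reconstruction_error(x, toy_reconstruct(x)) for x in normal_data]
threshold = fit_threshold(errors)

print(is_anomaly([0.4, 0.2, 0.6], toy_reconstruct, threshold))   # False
print(is_anomaly([3.0, -2.0, 0.5], toy_reconstruct, threshold))  # True
```

The first input resembles the normal data and is reconstructed closely; the second falls far outside the range the placeholder model can reproduce, so its error is well above the threshold.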

Conclusion

VAEs represent a significant advancement in the field of machine learning and artificial intelligence. By combining the power of autoencoders with the flexibility of probabilistic modeling, VAEs are able to generate new data that is similar to the data they were trained on, making them a powerful tool for tasks such as image synthesis and anomaly detection.

While the mathematical details of VAEs can be complex, the underlying principles are relatively straightforward: encode each input as a distribution rather than a single point, sample from that distribution in a way that keeps training possible, and decode the sample. Whether you’re a machine learning researcher, a data scientist, or just a curious observer, VAEs offer a fascinating glimpse into the future of artificial intelligence.