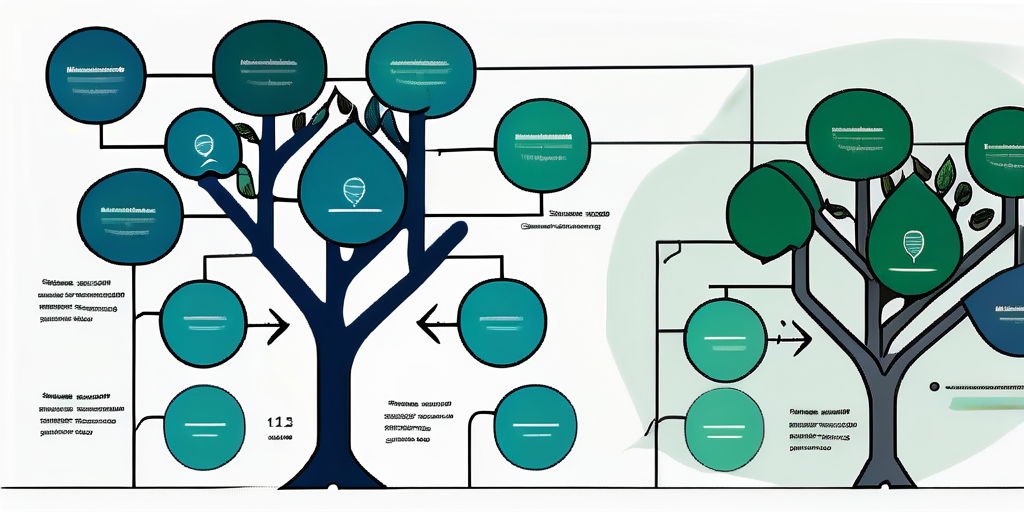

In artificial intelligence (AI), a decision tree is a versatile model used for both classification and regression tasks. In essence, it’s a flowchart-like structure where each internal node represents a test on a feature (or attribute), each branch represents a decision rule, and each leaf node represents an outcome. The topmost node in a decision tree is known as the root node. The algorithm learns to partition the data on the basis of attribute values, and it does so recursively, a process called recursive partitioning.

This article will delve into the intricate details of decision trees, their structure, how they function, their applications, and their advantages and disadvantages. We will also explore how decision trees are used in artificial intelligence, and how they contribute to the development of machine learning and data mining techniques.

Understanding the Structure of a Decision Tree

A decision tree is a hierarchical structure in which each internal node tests a certain attribute of the dataset and each leaf node holds a decision about the target: a class label for classification trees or a numerical value for regression trees. Some algorithms, such as CART, always produce binary trees, while others allow a node to have more than two branches. The tree is built in a top-down, recursive, divide-and-conquer manner. The choice of splits heavily affects a tree’s accuracy, and the splitting criteria are different for classification and regression trees.

Decision tree algorithms use different criteria to decide how to split a node into two or more sub-nodes. A good split increases the homogeneity of the resulting sub-nodes; in other words, the purity of each sub-node increases with respect to the target variable. The algorithm evaluates candidate splits on all available variables and then selects the split that results in the most homogeneous sub-nodes.
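The search over candidate splits can be sketched in a few lines of Python. This is a minimal illustration, not any particular library's implementation: it assumes numeric features, binary splits at midpoints between observed values, and Gini impurity as the homogeneity measure. The function names and the toy data are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0.0 means perfectly pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Try every feature and every midpoint threshold; keep the purest split.

    rows   : list of equal-length lists of numeric feature values
    labels : list of class labels, one per row
    Returns (feature_index, threshold, weighted_impurity), or None when no
    threshold separates the rows.
    """
    best = None
    n = len(rows)
    for f in range(len(rows[0])):
        values = sorted(set(row[f] for row in rows))
        # Candidate thresholds: midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [y for row, y in zip(rows, labels) if row[f] <= t]
            right = [y for row, y in zip(rows, labels) if row[f] > t]
            # Weight each child's impurity by its share of the samples.
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[2]:
                best = (f, t, score)
    return best


# Hypothetical toy data: [age, income]; the label says whether a customer bought.
rows = [[25, 40], [30, 60], [45, 50], [50, 90], [35, 85], [60, 30]]
labels = ["no", "no", "yes", "yes", "no", "yes"]
print(best_split(rows, labels))
```

On this toy data, splitting on age at 40 separates the two classes completely, so the search returns feature 0 with a weighted impurity of 0.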

Root Node

The root node is the starting point of any decision tree. This is the node from which all possible outcomes and decisions branch out. The root node is selected based on certain algorithms which we will discuss later in this article. The root node essentially represents the entire sample or population being analyzed, and it gets further divided into two or more homogeneous sets.

It’s crucial to select an appropriate root node because it affects the structure and effectiveness of the decision tree. The root node should ideally be a feature that best splits the dataset into distinct and homogeneous subsets. The selection of the root node is based on statistical methods, and different algorithms use different criteria for this selection.

Decision Nodes

Following the root node, we have decision nodes. These nodes represent the decisions that we will make based on certain conditions or rules. Each decision node has two or more branches, each representing a possible decision. The decision nodes are where the decision tree algorithm makes strategic splits.

The split at each decision node is selected based on certain criteria, such as entropy and the Gini index. These criteria measure the impurity of the data at a node, and the decision tree algorithm aims to increase the purity of the resulting sub-nodes with each split. The decision nodes continue to branch out until a certain stopping condition is met, such as a maximum depth or a node that is already pure.
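To make the two impurity measures concrete, here is a small sketch that computes both for three hypothetical nodes. It assumes the standard definitions: entropy is the sum of p * log2(1/p) over the class proportions p, and the Gini index is 1 minus the sum of p squared.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy in bits: sum of p * log2(1/p) over class proportions."""
    n = len(labels)
    return sum((c / n) * math.log2(n / c) for c in Counter(labels).values())


def gini(labels):
    """Gini index: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


# Three hypothetical nodes, from most to least impure.
mixed = ["yes"] * 5 + ["no"] * 5
skewed = ["yes"] * 9 + ["no"] * 1
pure = ["yes"] * 10

for name, node in [("mixed", mixed), ("skewed", skewed), ("pure", pure)]:
    print(f"{name:6s} entropy={entropy(node):.3f} gini={gini(node):.3f}")
```

Both measures are at their maximum for the evenly mixed node and drop to zero for the pure node, which is why a split that lowers them is said to increase purity.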

Leaf Nodes

Leaf nodes, also known as terminal nodes, represent the final decisions or outcomes of the decision tree. Once the decision tree reaches a leaf node, no further splitting occurs. The leaf node contains the final decision, which is the output of the decision tree.

Each path from the root node to a leaf node represents a rule or decision path. The number of leaf nodes in a decision tree can vary based on the complexity of the dataset and the depth of the tree. The depth of the tree is the length of the longest path from the root node to a leaf node.
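The pieces described in this section (root node, decision nodes, leaf nodes, decision paths, and depth) can be sketched as a small data structure. The tree below is built by hand rather than learned, and the class names, feature names, and thresholds are all hypothetical; depth is counted here as the number of edges on the longest path.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    prediction: str          # final outcome stored at a terminal node


@dataclass
class Decision:
    feature: str             # attribute tested at this node
    threshold: float
    left: "Node"             # branch taken when value <= threshold
    right: "Node"            # branch taken when value > threshold


Node = Union[Leaf, Decision]


def predict(node, sample):
    """Follow branches from the root until a leaf is reached."""
    while isinstance(node, Decision):
        node = node.left if sample[node.feature] <= node.threshold else node.right
    return node.prediction


def depth(node):
    """Number of edges on the longest root-to-leaf path."""
    if isinstance(node, Leaf):
        return 0
    return 1 + max(depth(node.left), depth(node.right))


def rules(node, path=()):
    """List every root-to-leaf path as a readable if-then rule."""
    if isinstance(node, Leaf):
        return [" and ".join(path) + " => " + node.prediction]
    left = rules(node.left, path + (f"{node.feature} <= {node.threshold}",))
    right = rules(node.right, path + (f"{node.feature} > {node.threshold}",))
    return left + right


# The outermost Decision is the root node; the nested one is a decision node.
tree = Decision(
    "income", 50,
    Decision("age", 30, Leaf("reject"), Leaf("review")),
    Leaf("approve"),
)

print(predict(tree, {"income": 40, "age": 45}))
print(depth(tree))
for rule in rules(tree):
    print(rule)
```

Each printed rule corresponds to exactly one leaf, so a tree with three leaves yields three rules.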

Algorithms Used in Decision Trees

There are several popular algorithms used to construct decision trees. These algorithms use different metrics to determine the best split at each node. The most commonly used algorithms are ID3 (Iterative Dichotomiser 3), C4.5 (successor of ID3), CART (Classification and Regression Trees), and CHAID (Chi-squared Automatic Interaction Detector).

Each of these algorithms has its own strengths and weaknesses, and they are chosen based on the specific requirements of the task. For example, ID3 and C4.5 are typically used for tasks where the target variable is categorical, while CART is used for both categorical and continuous target variables.

ID3 Algorithm

The ID3 algorithm was one of the earliest algorithms used for decision tree construction. It uses entropy and information gain as metrics to determine the best split. The ID3 algorithm starts with the original set as the root node, and then it iteratively divides the data based on the attribute that results in the highest information gain.
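The attribute selection step of ID3 can be illustrated as follows. This is a minimal sketch of information gain on categorical attributes only, not a full ID3 implementation (it does not recurse or build the tree), and the weather-style toy data is hypothetical.

```python
import math
from collections import Counter, defaultdict


def entropy(labels):
    """Shannon entropy in bits of a list of class labels."""
    n = len(labels)
    return sum((c / n) * math.log2(n / c) for c in Counter(labels).values())


def information_gain(rows, labels, attribute):
    """Parent entropy minus the size-weighted entropy of the child groups."""
    groups = defaultdict(list)
    for row, y in zip(rows, labels):
        groups[row[attribute]].append(y)   # one child per attribute value
    n = len(labels)
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


def choose_attribute(rows, labels, attributes):
    """Pick the attribute with the highest information gain."""
    return max(attributes, key=lambda a: information_gain(rows, labels, a))


# Hypothetical toy data.
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "rainy", "windy": "no"},
    {"outlook": "rainy", "windy": "yes"},
    {"outlook": "overcast", "windy": "no"},
    {"outlook": "overcast", "windy": "yes"},
]
labels = ["play", "play", "stay", "stay", "play", "play"]

for attr in ("outlook", "windy"):
    print(attr, round(information_gain(rows, labels, attr), 3))
print(choose_attribute(rows, labels, ["outlook", "windy"]))
```

Here every value of "outlook" leads to a pure group, so it removes all of the uncertainty in the labels, while "windy" leaves the class mix unchanged and has a gain of zero.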

One of the major limitations of the ID3 algorithm is that it doesn’t handle numerical attributes well. It also doesn’t handle missing values, and it tends to overfit the data. Despite these limitations, the ID3 algorithm laid the foundation for many of the decision tree algorithms that followed.

C4.5 Algorithm

The C4.5 algorithm is an extension of the ID3 algorithm, and it addresses some of the limitations of ID3. Like ID3, it uses entropy and information gain to determine the best split, but it also includes a normalization factor known as ‘gain ratio’ to handle the bias towards multi-valued attributes.
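The bias that gain ratio corrects is easiest to see with an attribute that has a unique value per row, such as an ID column. The sketch below assumes the usual definition, gain ratio = information gain / split information, where split information is the entropy of the attribute's own value distribution. It leaves out the other refinements of C4.5, and the data is hypothetical.

```python
import math
from collections import Counter, defaultdict


def entropy(items):
    """Shannon entropy in bits of any list of discrete values."""
    n = len(items)
    return sum((c / n) * math.log2(n / c) for c in Counter(items).values())


def information_gain(rows, labels, attribute):
    groups = defaultdict(list)
    for row, y in zip(rows, labels):
        groups[row[attribute]].append(y)
    n = len(labels)
    return entropy(labels) - sum(len(g) / n * entropy(g) for g in groups.values())


def gain_ratio(rows, labels, attribute):
    """Information gain normalized by the entropy of the attribute itself."""
    split_info = entropy([row[attribute] for row in rows])
    if split_info == 0:      # attribute has a single value: no useful split
        return 0.0
    return information_gain(rows, labels, attribute) / split_info


# Hypothetical data: "id" is unique per row, "weather" has two values.
weather = ["sunny"] * 4 + ["rainy"] * 4
labels = ["yes", "yes", "yes", "yes", "no", "no", "no", "yes"]
rows = [{"id": i, "weather": w} for i, w in enumerate(weather)]

for attr in ("id", "weather"):
    print(attr,
          round(information_gain(rows, labels, attr), 3),
          round(gain_ratio(rows, labels, attr), 3))
```

Plain information gain prefers "id" because every single-row group is trivially pure, even though such a split cannot generalize. Dividing by the split information penalizes the eight-way split, and "weather" comes out ahead.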

The C4.5 algorithm can handle both categorical and numerical attributes, and it can also handle missing values. It also includes a pruning step to reduce overfitting. The C4.5 algorithm is one of the most widely used decision tree algorithms, and it’s known for its robustness and flexibility.

CART Algorithm

The CART algorithm is another popular decision tree algorithm that can handle both classification and regression tasks. Unlike ID3 and C4.5, the CART algorithm uses the Gini index as a metric to determine the best split for classification. The Gini index measures the impurity of a node, and the CART algorithm aims to minimize the weighted Gini index of the child nodes with each split. For regression, it typically minimizes the variance (squared error) of the target within each child node instead.
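For the regression side of CART, the impurity measure usually described is the variance (mean squared error) of the target within each child rather than the Gini index. The following is a minimal sketch of one such binary split on a single numeric feature, with hypothetical data; it is not a full CART implementation.

```python
def variance(values):
    """Mean squared deviation from the mean (0.0 for an empty list)."""
    n = len(values)
    if n == 0:
        return 0.0
    mean = sum(values) / n
    return sum((v - mean) ** 2 for v in values) / n


def best_threshold(xs, ys):
    """Binary split on one numeric feature that minimizes weighted variance.

    Returns (threshold, weighted_variance), or None if all xs are equal.
    """
    best = None
    n = len(xs)
    distinct = sorted(set(xs))
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = (len(left) * variance(left) + len(right) * variance(right)) / n
        if best is None or score < best[1]:
            best = (t, score)
    return best


# Hypothetical data with two clearly separated groups of target values.
xs = [1, 2, 3, 10, 11, 12]
ys = [5.0, 6.0, 5.5, 20.0, 21.0, 19.5]

threshold, score = best_threshold(xs, ys)
print(threshold, round(score, 4))

# Each leaf of a regression tree predicts the mean target of its samples.
left_mean = sum(y for x, y in zip(xs, ys) if x <= threshold) / 3
print(left_mean)
```

The chosen threshold falls in the gap between the two groups, because any other split would mix low and high target values in the same child.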

The CART algorithm also includes a pruning step to prevent overfitting. It creates a large tree and then prunes it back to find an optimal size. The CART algorithm is known for its simplicity and effectiveness, and it’s widely used in machine learning and data mining.
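The pruning step can be sketched in a simplified form of cost-complexity pruning. The sketch assumes a fixed penalty `alpha` per leaf and scores a tree as training errors plus `alpha` times the number of leaves; how `alpha` itself is chosen (commonly by cross-validation) is left out. The nested-dictionary tree format and the error counts are hypothetical.

```python
def prune(node, alpha):
    """Bottom-up pruning that minimizes errors + alpha * number_of_leaves.

    node is a dict with "errors" (training errors if this node were turned
    into a leaf) and, for internal nodes, a "children" list.
    Returns (pruned_node, cost, leaf_count).
    """
    leaf_cost = node["errors"] + alpha
    children = node.get("children")
    if not children:
        return {"errors": node["errors"]}, leaf_cost, 1

    pruned_children, subtree_cost, leaves = [], 0.0, 0
    for child in children:
        pruned, cost, count = prune(child, alpha)
        pruned_children.append(pruned)
        subtree_cost += cost
        leaves += count

    # Collapse the subtree when a single leaf is at least as cheap.
    if leaf_cost <= subtree_cost:
        return {"errors": node["errors"]}, leaf_cost, 1
    return {"errors": node["errors"], "children": pruned_children}, subtree_cost, leaves


# Hypothetical fully grown tree: the left split removes one error,
# while the right split removes five.
tree = {
    "errors": 10,
    "children": [
        {"errors": 4, "children": [{"errors": 2}, {"errors": 1}]},
        {"errors": 6, "children": [{"errors": 0}, {"errors": 1}]},
    ],
}

for alpha in (0, 1.0, 10):
    _, cost, leaves = prune(tree, alpha)
    print(f"alpha={alpha}: leaves={leaves}, cost={cost}")
```

As `alpha` grows, the weak left split is removed first and eventually the whole tree collapses to a single leaf, which mirrors the idea of growing a large tree and cutting it back to a suitable size.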

Applications of Decision Trees

Decision trees are widely used in various fields due to their simplicity and interpretability. They are used in machine learning for both classification and regression tasks. In data mining, decision trees are used for data exploration and pattern recognition. They are also used in operations research for decision analysis and strategic planning.

Some specific applications of decision trees include customer segmentation, fraud detection, medical diagnosis, credit risk analysis, and many more. Decision trees are particularly useful in situations where we need to make a series of decisions, and each decision leads to a different outcome.

Machine Learning

In machine learning, decision trees are used as a predictive modeling tool. They are primarily a supervised learning method, where the target variable is known for the training data; tree-based approaches to unsupervised tasks, where no target variable is available, exist but are less common. Decision trees are particularly effective for tasks where the relationships between the variables are non-linear and complex.

Decision trees are also used as a base learner in ensemble methods, such as random forests and boosting. Ensemble methods combine the predictions of multiple base learners to improve the overall prediction accuracy. Decision trees are a popular choice for base learners because they can capture complex interactions between variables, and they are easy to interpret.
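The idea of combining many tree-based learners can be sketched with bagging: each base learner is trained on a bootstrap sample and the ensemble predicts by majority vote. To keep the example short, the base learner here is a one-split tree (a "stump") on a single numeric feature. This is not a random forest, which also randomizes the features considered at each split, and all names and data are hypothetical.

```python
import random
from collections import Counter


def majority(labels):
    """Most common label in a non-empty list."""
    return Counter(labels).most_common(1)[0][0]


def fit_stump(xs, ys):
    """One-split tree: returns (threshold, left_label, right_label)."""
    best = None
    distinct = sorted(set(xs))
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        l_lab, r_lab = majority(left), majority(right)
        errors = sum(y != l_lab for y in left) + sum(y != r_lab for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, l_lab, r_lab)
    if best is None:
        # Every x in the sample is identical: always predict the majority.
        label = majority(ys)
        return (float("inf"), label, label)
    return best[1:]


def stump_predict(stump, x):
    threshold, left_label, right_label = stump
    return left_label if x <= threshold else right_label


def bagged_stumps(xs, ys, n_trees, seed=0):
    """Train each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    stumps = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        stumps.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return stumps


def vote(stumps, x):
    """Ensemble prediction: majority vote over all base learners."""
    return majority([stump_predict(s, x) for s in stumps])


xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ys = ["a"] * 5 + ["b"] * 5
stumps = bagged_stumps(xs, ys, n_trees=25)

print(sorted(set(s[0] for s in stumps)))   # thresholds differ between samples
print(vote(stumps, 1), vote(stumps, 10))
```

Individual stumps place their threshold differently depending on which rows their bootstrap sample happened to contain, but the vote over all of them is stable for points away from the class boundary.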

Data Mining

In data mining, decision trees are used for data exploration and pattern recognition. They are used to identify patterns and relationships in large datasets that may not be apparent through other methods. Decision trees can handle both categorical and numerical data, and some algorithms, such as C4.5, can also handle missing values, making them a versatile tool for data mining.

Decision trees are also related to rule mining, a family of methods for discovering interesting relationships in large datasets, because each root-to-leaf path can be read as an if-then rule. For example, in market basket analysis, a tree trained to predict whether a given item is purchased can indicate which other items tend to be bought together with it.

Advantages and Disadvantages of Decision Trees

Like any other machine learning algorithm, decision trees have their advantages and disadvantages. The main ones are outlined below.

Advantages

One of the main advantages of decision trees is their simplicity. They are easy to understand and interpret, even for people without a background in data science. This makes them a great tool for exploratory data analysis and decision making.

Another advantage of decision trees is their flexibility. They can handle both categorical and numerical data, and some algorithms can also handle missing values. This makes them a versatile tool for data analysis. Furthermore, decision trees can capture non-linear relationships between variables, which makes them a powerful tool for predictive modeling.

Disadvantages

Despite their advantages, decision trees also have some disadvantages. One of the main disadvantages is their tendency to overfit the data. Overfitting occurs when the decision tree is too complex and it captures the noise in the data. This can lead to poor generalization performance on unseen data.

Another disadvantage of decision trees is their instability. Small changes in the data can lead to a completely different tree. This can be mitigated by using ensemble methods, such as random forests, which average the predictions of multiple decision trees to improve stability and prediction accuracy.

Conclusion

Decision trees are a powerful tool in the field of artificial intelligence, particularly in machine learning and data mining. They are simple to understand and interpret, and they can handle both categorical and numerical data, making them a versatile tool for data analysis.

Despite their advantages, decision trees also have some limitations, such as their tendency to overfit the data and their instability. However, these limitations can be mitigated by using ensemble methods and by tuning the parameters of the decision tree algorithm.

In conclusion, decision trees are a fundamental component of artificial intelligence, and they continue to play a crucial role in the development of machine learning and data mining techniques.