In artificial intelligence and machine learning, ‘Random Forest’ is a frequently used term. It refers to a versatile machine learning method that can perform both regression and classification. It is also used for related tasks such as dimensionality reduction (through its feature importance scores) and outlier detection. The model is a collection of decision trees, hence the name ‘Forest’. This article aims to provide a comprehensive understanding of the Random Forest model.

Random Forest is an ensemble learning method, in which many individually weak models are combined into a strong one. Combining multiple models often improves accuracy in machine learning, and Random Forest is a classic example. It trains each decision tree on a random sample of the training set drawn with replacement (a bootstrap sample) and then aggregates the trees' outputs: a majority vote decides the final class of a test object in classification, and an average gives the final value in regression.
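Why can many weak models add up to a strong one? A minimal sketch, assuming (unrealistically) that the models make independent errors: if each of `n` two-class classifiers is right with probability `p > 0.5`, the chance that their majority vote is right grows with `n`. The function name `majority_vote_accuracy` is hypothetical, and real trees are correlated, so the gain in practice is smaller.

```python
from math import comb


def majority_vote_accuracy(n_models: int, p: float) -> float:
    """Probability that a strict majority of n_models independent
    classifiers, each correct with probability p, votes for the right class.
    Assumes two classes and an odd n_models so ties cannot occur."""
    need = n_models // 2 + 1  # smallest strict majority
    return sum(
        comb(n_models, k) * p**k * (1 - p) ** (n_models - k)
        for k in range(need, n_models + 1)
    )


for n in (1, 5, 25, 101):
    print(n, round(majority_vote_accuracy(n, 0.6), 3))
```

With `p = 0.6` the printed accuracy starts at 0.6 for a single model and climbs towards 1 as `n` grows. With `p` below 0.5 the same vote pushes accuracy towards 0, which is why each tree must do better than chance.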

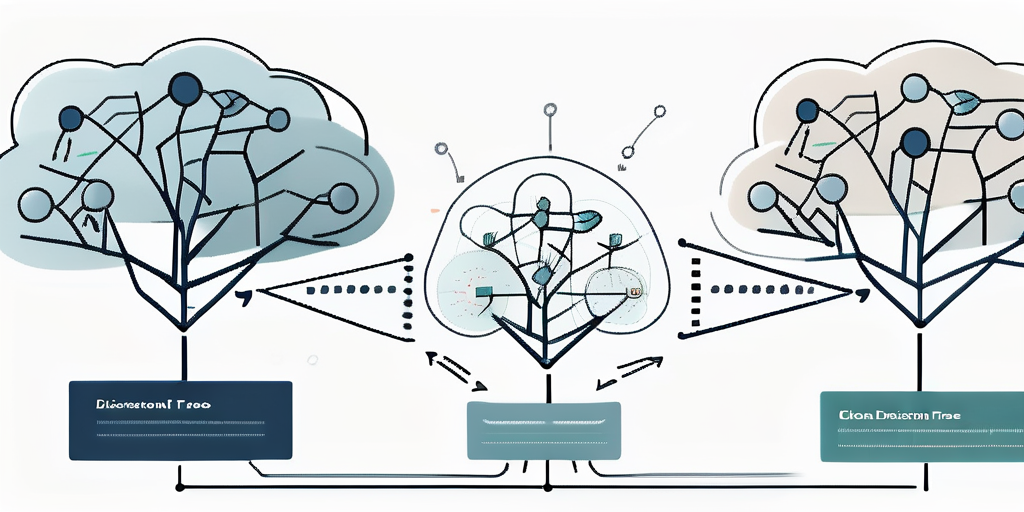

Understanding Decision Trees

Before diving into the Random Forest model, it’s crucial to understand decision trees, the building blocks of any Random Forest. A decision tree is a flowchart-like structure in which each internal node tests a feature (or attribute), each branch represents an outcome of that test (a decision rule), and each leaf node holds a final outcome. The topmost node is called the root node. The tree is learned by splitting the data on attribute values and then repeating the process on each resulting subset, a procedure known as recursive partitioning.
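A decision tree can be sketched as nested nodes: internal nodes hold a feature and a threshold, leaves hold an outcome, and prediction walks from the root down to a leaf. The `Node` class, the `predict` function and the toy weather tree below are hypothetical illustrations, not part of any library.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class Node:
    # Internal node: tests x[feature] <= threshold.
    # Leaf node: feature is None and value holds the outcome.
    feature: Optional[str] = None
    threshold: float = 0.0
    left: Optional["Node"] = None   # branch taken when the test is true
    right: Optional["Node"] = None  # branch taken when the test is false
    value: Union[str, float, None] = None


def predict(node: Node, x: dict) -> Union[str, float, None]:
    """Follow the decision rules from the root until a leaf is reached."""
    while node.feature is not None:
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.value


# Hypothetical toy tree: "play outside?" decided by humidity, then wind.
root = Node(
    feature="humidity", threshold=70,
    left=Node(feature="wind_kmh", threshold=20,
              left=Node(value="yes"), right=Node(value="no")),
    right=Node(value="no"),
)

print(predict(root, {"humidity": 55, "wind_kmh": 10}))  # yes
print(predict(root, {"humidity": 85, "wind_kmh": 5}))   # no
```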

Decision trees are easy to understand and interpret, which makes them a popular choice. However, they are prone to overfitting, especially when a tree is very deep. This is where Random Forest comes in: by building many decision trees and aggregating their results, Random Forest reduces overfitting while maintaining a high level of accuracy.

Types of Decision Trees

There are two main types of decision trees: categorical variable decision trees and continuous variable decision trees. A categorical variable decision tree has a categorical target variable, while a continuous variable decision tree has a continuous target variable. The two types differ in how the tree is grown from the training data.

For a categorical variable decision tree, a common way to choose binary splits is the Gini impurity criterion. For a continuous variable decision tree, splits are chosen to maximize the reduction in variance of the target. After the splits are created, a standalone decision tree is often pruned to avoid overfitting the data; the trees inside a Random Forest, by contrast, are usually grown without pruning.
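The two split criteria can be compared with one helper that tries every midpoint between distinct feature values and keeps the split with the largest decrease in impurity. A minimal sketch in plain Python; `gini`, `best_threshold` and the toy data are hypothetical, and the population variance from Python's `statistics` module stands in for the regression impurity.

```python
from collections import Counter
from statistics import pvariance


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_threshold(xs, ys, impurity):
    """Try each midpoint between sorted distinct x values and return the
    (threshold, impurity decrease) pair with the largest decrease."""
    parent = impurity(ys)
    values = sorted(set(xs))
    best = (None, 0.0)
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        # Children's impurity, weighted by the share of rows in each child.
        children = (len(left) * impurity(left) + len(right) * impurity(right)) / len(ys)
        if parent - children > best[1]:
            best = (t, parent - children)
    return best


xs = [1, 2, 3, 10, 11, 12]

# Classification tree: Gini impurity of class labels.
labels = ["a", "a", "a", "b", "b", "b"]
print(best_threshold(xs, labels, gini))  # (6.5, 0.5)

# Regression tree: variance of continuous targets.
targets = [1.0, 1.2, 0.9, 5.0, 5.1, 4.9]
print(best_threshold(xs, targets, pvariance))
```

Both calls choose the threshold 6.5, which separates the low and high groups. In the classification case both children are pure, so the Gini impurity drops from 0.5 to 0.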

How Random Forest Works

The Random Forest algorithm works in four basic steps: (1) draw random samples, with replacement, from the given dataset; (2) build a decision tree for each sample and let each tree make a prediction; (3) have every tree vote on the output; and (4) predict the final output by majority vote (or, for regression, by averaging the trees' predictions).
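The four steps map onto a few lines of plain Python. This is a minimal sketch rather than a faithful implementation: to stay short, each "tree" is a decision stump (a single Gini-chosen split), whereas a real Random Forest grows deep trees and also samples features at each split. All function names are hypothetical.

```python
import random
from collections import Counter


def majority(labels):
    """Most common label (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def fit_stump(X, y):
    """Stand-in for a full tree: the single Gini-best split over all features."""
    best = None  # (weighted impurity, feature, threshold, left label, right label)
    for f in range(len(X[0])):
        values = sorted({row[f] for row in X})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t, majority(left), majority(right))
    if best is None:  # every feature is constant in this sample
        label = majority(y)
        return lambda row: label
    _, f, t, left_label, right_label = best
    return lambda row: left_label if row[f] <= t else right_label


def fit_forest(X, y, n_trees=25, seed=0):
    rng = random.Random(seed)
    n = len(X)
    trees = []
    for _ in range(n_trees):
        # Step 1: random sample of n rows drawn with replacement (bootstrap).
        idx = [rng.randrange(n) for _ in range(n)]
        # Step 2: build one tree on that sample.
        trees.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    return trees


def predict_forest(trees, row):
    # Step 3: every tree votes. Step 4: the majority vote is the prediction.
    return majority([tree(row) for tree in trees])


X = [[1.0, 5.0], [2.0, 4.0], [3.0, 6.0], [7.0, 5.0], [8.0, 4.0], [9.0, 6.0]]
y = ["a", "a", "a", "b", "b", "b"]
forest = fit_forest(X, y)
print(predict_forest(forest, [1.5, 5.0]))  # a
print(predict_forest(forest, [8.5, 5.0]))  # b
```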

Random Forest adds extra randomness while growing the trees. Instead of searching for the best feature among all features when splitting a node, it searches for the best feature among a random subset of features. This produces more diverse trees, which generally results in a better model. You can make the trees even more random by also using random thresholds for each feature instead of searching for the best possible thresholds (as a normal decision tree does); this variant is known as Extremely Randomized Trees, or Extra-Trees.
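The extra randomness lives in the split search. In the hypothetical `choose_split` below, each node considers only `max_features` randomly chosen features (defaulting to the square root of the feature count, a common choice for classification). With `random_thresholds=True` it instead draws one random threshold per candidate feature, in the spirit of Extra-Trees. The parameter name echoes scikit-learn's `max_features`, but this is a plain-Python sketch, not scikit-learn's implementation.

```python
import math
import random


def gini(labels):
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def split_score(X, y, f, t):
    """Size-weighted Gini impurity of the two children of split (f, t)."""
    left = [lab for row, lab in zip(X, y) if row[f] <= t]
    right = [lab for row, lab in zip(X, y) if row[f] > t]
    if not left or not right:
        return math.inf  # degenerate split, never chosen
    return (len(left) * gini(left) + len(right) * gini(right)) / len(y)


def choose_split(X, y, rng, max_features=None, random_thresholds=False):
    """Return (score, feature, threshold) for one node; feature is None if no split."""
    n_features = len(X[0])
    k = max_features or max(1, int(math.sqrt(n_features)))
    candidates = rng.sample(range(n_features), k)  # random feature subset
    best = (math.inf, None, None)
    for f in candidates:
        values = sorted({row[f] for row in X})
        if len(values) < 2:
            continue  # constant feature: nothing to split on
        if random_thresholds:
            # Extra-Trees style: one random threshold per candidate feature.
            thresholds = [rng.uniform(values[0], values[-1])]
        else:
            # Random Forest style: search all midpoints for this feature.
            thresholds = [(a + b) / 2 for a, b in zip(values, values[1:])]
        for t in thresholds:
            score = split_score(X, y, f, t)
            if score < best[0]:
                best = (score, f, t)
    return best


# Only feature 2 separates the classes perfectly.
X = [[0, 5, 1, 3], [1, 3, 2, 3], [0, 4, 3, 1],
     [1, 5, 8, 2], [0, 3, 9, 1], [1, 4, 7, 2]]
y = ["a", "a", "a", "b", "b", "b"]

print(choose_split(X, y, random.Random(1), max_features=4))  # (0.0, 2, 5.0)
for seed in range(3):
    # Default: 2 of the 4 features per node, so the chosen feature varies.
    print(choose_split(X, y, random.Random(seed)))
```

When every feature is a candidate, the search always finds feature 2. With only a random subset, some nodes must settle for a weaker feature, which is exactly what makes the trees differ from one another.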

Feature Importance

One of the great things about Random Forest is that it can measure the relative importance of each feature for the prediction. Scikit-learn (sklearn) exposes this through the `feature_importances_` attribute, which measures a feature’s importance by how much the tree nodes that use that feature reduce impurity on average across all trees in the forest. It computes this score automatically for each feature after training and scales the results so that all importances sum to 1.
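The impurity-based score can be sketched by hand. Each internal node credits its feature with its weighted impurity decrease. Per-tree totals are scaled to sum to 1, averaged over the forest, and rescaled, which is similar in spirit to scikit-learn's forests but is not their code. The node tuple layout and function names are hypothetical.

```python
def tree_importances(splits, n_features):
    """splits: one tuple per internal node of a single tree,
    (feature, n_node, impurity, n_left, impurity_left, n_right, impurity_right).
    Returns that tree's importances, scaled to sum to 1."""
    totals = [0.0] * n_features
    for f, n, imp, n_l, imp_l, n_r, imp_r in splits:
        # Impurity decrease at this node, weighted by the rows reaching it.
        # Dividing by the total row count would cancel out in the scaling below.
        totals[f] += n * imp - n_l * imp_l - n_r * imp_r
    total = sum(totals)
    return [v / total for v in totals] if total > 0 else totals


def forest_importances(forest, n_features):
    """Average the per-tree importances across the forest, then rescale to sum to 1."""
    per_tree = [tree_importances(splits, n_features) for splits in forest]
    avg = [sum(column) / len(per_tree) for column in zip(*per_tree)]
    total = sum(avg)
    return [v / total for v in avg] if total > 0 else avg


# Two hand-made trees over three features (numbers are illustrative only).
tree_a = [(0, 100, 0.5, 60, 0.2, 40, 0.3), (1, 60, 0.2, 30, 0.0, 30, 0.1)]
tree_b = [(2, 100, 0.5, 50, 0.1, 50, 0.1)]
print(forest_importances([tree_a, tree_b], n_features=3))  # about [0.371, 0.129, 0.5]
```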

This is one reason Random Forest is considered a handy, easy-to-use algorithm: it is quite easy to see which features actually drive the predictions.

Advantages of Random Forest

Random Forest has several advantages. First, it is often among the most accurate off-the-shelf learning algorithms, and for many data sets it produces a highly accurate classifier. Second, it runs efficiently on large databases and can handle thousands of input variables without variable deletion. Third, it estimates which variables are important in the classification.

Furthermore, in its original formulation Random Forest has an effective method for estimating missing data that maintains accuracy when a large proportion of the data is missing, as well as methods for balancing error in data sets with imbalanced classes. Lastly, it generates an internal, unbiased estimate of the generalization error as the forest is built.
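The internal error estimate is usually the out-of-bag (OOB) estimate. Each bootstrap sample leaves out roughly a third of the rows (about 1/e, or 36.8%, for large data sets), so every row can be scored by the trees that never saw it. A minimal sketch with hypothetical function names and hand-made inputs; in scikit-learn the equivalent is enabled with `oob_score=True`.

```python
import random


def oob_fraction(n, seed=0):
    """Share of n rows that one bootstrap sample (n draws with replacement) misses."""
    rng = random.Random(seed)
    in_bag = {rng.randrange(n) for _ in range(n)}
    return 1 - len(in_bag) / n


def oob_error(in_bag_sets, tree_predictions, y):
    """in_bag_sets[b]: row indices used to train tree b.
    tree_predictions[b][i]: tree b's predicted label for row i.
    Each row is judged only by a majority vote of trees that never saw it."""
    errors = scored = 0
    for i, label in enumerate(y):
        votes = [preds[i] for bag, preds in zip(in_bag_sets, tree_predictions)
                 if i not in bag]
        if not votes:
            continue  # row was in every bootstrap sample: no OOB vote
        winner = max(set(votes), key=votes.count)
        errors += winner != label
        scored += 1
    return errors / scored if scored else float("nan")


print(round(oob_fraction(10_000), 3))  # close to 1/e, about 0.368

# Three hypothetical trees, three rows: each row is out-of-bag for one tree.
bags = [{0, 1}, {1, 2}, {0, 2}]
preds = [["a", "b", "a"], ["a", "b", "a"], ["a", "a", "a"]]
print(oob_error(bags, preds, ["a", "b", "a"]))  # 1 of 3 rows wrong
```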

Overfitting and Random Forest

Overfitting is a common problem in machine learning that can affect most models. It happens when the learning algorithm keeps producing hypotheses that reduce training-set error at the cost of increased test-set error. In other words, the model fits the training data too closely, including its noise, and performs poorly on unseen data.

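Random Forest avoids this problem in most cases. Each tree is trained on a different bootstrap sample and considers only a random subset of features at each split, so the trees make partly independent errors. Averaging their predictions cancels out much of the variance of the individual (typically deep, unpruned) trees, which lets the forest stay accurate without overfitting as badly as a single tree.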
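A standard variance identity makes this argument concrete. As a simplifying assumption, suppose each of the $B$ trees' predictions $T_b(x)$ has variance $\sigma^2$ and every pair of trees has correlation $\rho$. Then the variance of the forest's averaged prediction is

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} T_b(x)\right) = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2 .
$$

Adding trees shrinks only the second term. The first term is a floor set by how correlated the trees are, which is why Random Forest lowers $\rho$ with bootstrap samples and random feature subsets rather than relying on the number of trees alone.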

Disadvantages of Random Forest

Despite its many advantages, Random Forest also has some disadvantages. It does a good job at classification but is less well suited to regression, because its predictions are averages of leaf values and therefore not truly continuous. In regression it cannot predict beyond the range of target values seen in the training data, and it may overfit data sets that are particularly noisy.
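The range limitation follows from how regression trees predict: each leaf returns the mean target of the training rows that reached it, so no tree (and no average of trees) can output a value outside the range of the training targets. A minimal sketch with a hypothetical one-split regression tree fitted to a perfectly linear target:

```python
from statistics import mean


def fit_regression_stump(xs, ys):
    """One-split regression tree: each leaf predicts the mean training target
    of the rows that fall into it. The split minimizes the squared error."""
    best = None  # (squared error, threshold, left mean, right mean)
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        ml, mr = mean(left), mean(right)
        sse = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, ml, mr)
    _, t, left_value, right_value = best
    return lambda x: left_value if x <= t else right_value


xs = [1, 2, 3, 4, 5, 6]
ys = [2 * x for x in xs]  # perfectly linear target: y = 2x
predict = fit_regression_stump(xs, ys)
print(predict(100))  # 10, not 200
print(predict(-50))  # 4, not -100
```

A linear model would extrapolate to 200 here; the tree returns 10, the mean of the largest training targets (8, 10 and 12).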

Another limitation is that Random Forest can feel like a black-box approach to statistical modelers: there is very little control over what the model does. At best, you can try different parameters and random seeds.

Complexity and Resource Consumption

Random Forest builds many trees (rather than the single tree of a decision tree) and combines their outputs. By default, Python's scikit-learn library builds 100 trees (its `n_estimators` parameter). As a result, the algorithm requires much more computational power and memory, and it takes considerably longer to train than a single decision tree.

Because the model is an ensemble of decision trees, it also needs more resources for prediction: every input has to pass through every tree, so predictions can be slow once the model is trained. More accurate ensembles usually require more trees, which makes the model slower to use.

Applications of Random Forest

Random Forest is widely used in fields such as banking, the stock market, medicine and e-commerce. In banking, for example, it is used to identify customers who will use the bank’s services more often than others and repay their debts on time. Data scientists then use these predictions to analyze customer behavior.

In finance, it is used to predict a stock’s future behavior. In healthcare, it is used to help identify suitable combinations of components in medicines and to analyze a patient’s medical history to identify diseases. Lastly, in e-commerce, Random Forest is used to predict whether a customer will actually like a product.

Real-World Examples

One of the most prominent applications of Random Forest is Aircraft Engine Fault Diagnosis. Data scientists at NASA used Random Forest to determine the remaining useful life of aircraft engines. They used sensor data to predict when an aircraft engine would fail in the future so that maintenance could be planned ahead.

Another interesting application is in the field of genetics, where Random Forest was used for predicting disease status based on DNA sequence data. It was found to be very effective in identifying the most important sequences and it has been suggested that the method could be used to predict disease risk in future studies.

Conclusion

Random Forest is a powerful machine learning algorithm that can solve both classification and regression problems and gives decent estimates for both. Overfitting is one of the big problems in machine learning, but it is usually less severe for a Random Forest, because adding more trees does not make the forest overfit: its generalization error levels off as trees are added.

The algorithm is parallelizable: because each tree is trained independently, the work can be split across multiple processor cores or machines, which shortens computation time. Although it takes a lot of computational power and resources, its performance makes it worth trying on your data set.
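Because each tree depends only on its own bootstrap sample, growing the forest is simply a map over per-tree seeds. A minimal sketch, assuming a hypothetical single-split regression tree as the per-tree work. `ThreadPoolExecutor` keeps the example portable, but in CPython pure-Python code will not run faster on threads because of the global interpreter lock. For real speed-ups you would swap in `ProcessPoolExecutor` (the `partial` over a top-level function keeps each task picklable; on platforms that spawn processes, guard the entry point with `if __name__ == "__main__":`), or, in scikit-learn, set the estimator's `n_jobs` parameter.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from functools import partial
from statistics import mean


def grow_tree(seed, xs, ys):
    """Grow one hypothetical single-split regression tree on its own bootstrap
    sample. It uses a private RNG and shares no state with other trees."""
    rng = random.Random(seed)
    idx = [rng.randrange(len(xs)) for _ in xs]  # bootstrap sample
    bx, by = [xs[i] for i in idx], [ys[i] for i in idx]
    best = (float("inf"), None, mean(by), mean(by))  # fallback: no split
    values = sorted(set(bx))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(bx, by) if x <= t]
        right = [y for x, y in zip(bx, by) if x > t]
        ml, mr = mean(left), mean(right)
        sse = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
        if sse < best[0]:
            best = (sse, t, ml, mr)
    return best[1:]  # (threshold, left value, right value)


def grow_forest(xs, ys, n_trees=50, workers=4):
    """Trees are independent, so they can be grown concurrently."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in input order, so the forest is the same
        # however the work is scheduled across workers.
        return list(pool.map(partial(grow_tree, xs=xs, ys=ys), range(n_trees)))


def predict(forest, x):
    """Average the trees' leaf values for input x."""
    return mean(lv if t is None or x <= t else rv for t, lv, rv in forest)


xs = list(range(20))
ys = [0.0] * 10 + [1.0] * 10
forest = grow_forest(xs, ys)
print(forest == [grow_tree(s, xs, ys) for s in range(50)])  # True
print(predict(forest, 2), predict(forest, 17))  # 0.0 1.0
```

Seeding each tree separately, rather than sharing one random generator, is what makes the parallel result identical to the sequential one.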