Joint embedding is an important concept for Large Language Models (LLMs) such as ChatGPT. It refers to mapping two different types of data into a common vector space, so that a model can compare and relate them directly.

LLMs have changed the field of natural language processing (NLP) through their ability to understand and generate human-like text. Embedding inputs into a shared vector space is one of the techniques behind this ability. This article explores joint embedding in depth: what it is, how it is implemented, where it is applied, and where its limits lie.

Understanding Joint Embedding

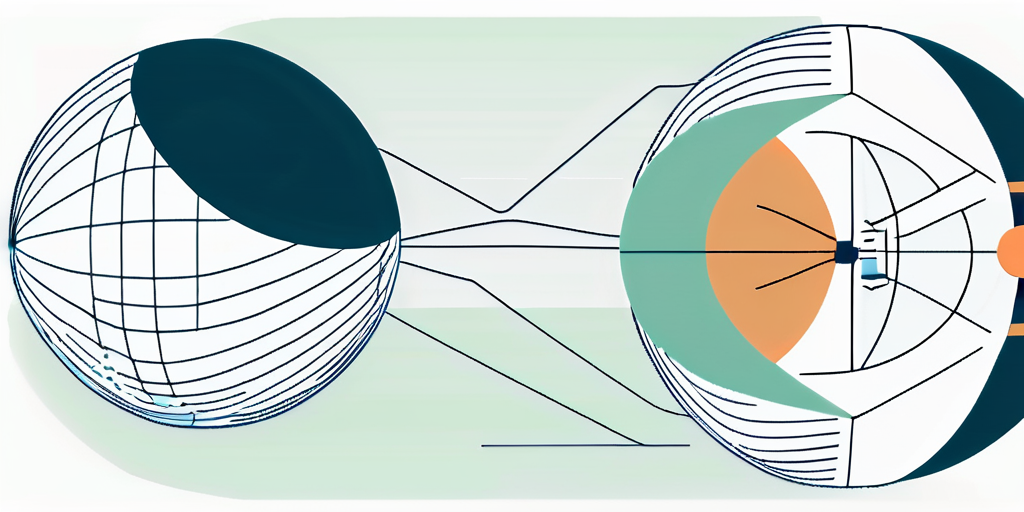

Joint embedding is a technique used in machine learning, particularly in the field of natural language processing. It involves mapping two different types of data into a common vector space. This mapping allows the model to understand the relationship between these two types of data and make predictions based on this understanding.

In the context of LLMs, joint embedding often involves mapping words or phrases to vectors in the same space as other inputs, so that items with related meanings end up close together. This allows the model to capture the semantic relationship between different words or phrases, which supports generating human-like text.
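As a minimal sketch of the idea, the Python snippet below projects two kinds of features into one shared space and compares them with cosine similarity. The feature sizes, the random projection matrices `W_text` and `W_image`, and the `embed` helper are all hypothetical; in a real model the projections are learned from paired data rather than drawn at random.

```python
import numpy as np

def embed(features, projection):
    """Project features into the shared space and L2-normalize each row."""
    z = features @ projection
    return z / np.linalg.norm(z, axis=1, keepdims=True)

rng = np.random.default_rng(0)
text_features = rng.normal(size=(3, 4))    # 3 hypothetical text items, 4 features each
image_features = rng.normal(size=(3, 6))   # 3 hypothetical images, 6 features each

# Hypothetical projection matrices; a trained model would learn these.
W_text = rng.normal(size=(4, 2))
W_image = rng.normal(size=(6, 2))

text_emb = embed(text_features, W_text)     # shape (3, 2)
image_emb = embed(image_features, W_image)  # shape (3, 2)

# Rows are unit length, so the dot product is the cosine similarity.
similarity = text_emb @ image_emb.T
```

Each entry `similarity[i, j]` scores how close text item `i` is to image `j` in the shared space. With learned projections, matching pairs would be expected to score highest.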

Role of Joint Embedding in LLMs

Joint embedding plays a pivotal role in the functioning of LLMs. Because related inputs are mapped to nearby points in a common vector space, the model can capture the semantic relationship between different words or phrases, which is crucial for generating human-like text.

Without joint embedding, LLMs would struggle to understand the relationship between different words or phrases. This would limit their ability to generate human-like text, making them less effective at tasks such as text generation, translation, and sentiment analysis.

Benefits of Joint Embedding

Joint embedding offers several benefits in the context of LLMs. First, it allows these models to understand the semantic relationship between different words or phrases. This understanding is crucial for tasks such as text generation, translation, and sentiment analysis.

Second, joint embedding allows LLMs to handle a wide range of data types. This flexibility is crucial for tasks that combine diverse types of data, such as image captioning and other multimodal tasks.

Joint Embedding Techniques

There are several techniques for implementing joint embedding in LLMs. These techniques generally involve mapping different types of data into a common vector space. The specific technique used can vary depending on the task at hand and the specific requirements of the model.

Some common techniques for joint embedding include canonical correlation analysis (CCA), deep canonical correlation analysis (DCCA), and dual encoder models. Each of these techniques has its own strengths and weaknesses, and the choice of technique can have a significant impact on the performance of the LLM.
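Dual encoder models are often trained with a contrastive objective that pulls matching pairs together and pushes mismatched pairs apart. The sketch below shows one such objective in Python, assuming the two encoders have already produced their embeddings. The function names, the temperature value, and the random stand-in embeddings are illustrative assumptions, not a description of any specific model.

```python
import numpy as np

def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def contrastive_loss(a_emb, b_emb, temperature=0.1):
    """Symmetric contrastive loss; row i of a_emb is paired with row i of b_emb."""
    a = a_emb / np.linalg.norm(a_emb, axis=1, keepdims=True)
    b = b_emb / np.linalg.norm(b_emb, axis=1, keepdims=True)
    logits = (a @ b.T) / temperature          # pairwise cosine similarities, scaled
    idx = np.arange(len(a))
    loss_a_to_b = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_b_to_a = -log_softmax(logits, axis=0)[idx, idx].mean()
    return 0.5 * (loss_a_to_b + loss_b_to_a)

rng = np.random.default_rng(0)
a = rng.normal(size=(8, 16))                       # stand-in outputs of encoder A
b_matched = a + 0.01 * rng.normal(size=(8, 16))    # encoder B agrees with A
b_shuffled = np.roll(b_matched, shift=1, axis=0)   # pairs deliberately misaligned

loss_matched = contrastive_loss(a, b_matched)
loss_shuffled = contrastive_loss(a, b_shuffled)
```

The loss is low when each item is closest to its own partner and high when the pairing is scrambled, which is the signal that drives both encoders toward a common space during training.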

Canonical Correlation Analysis (CCA)

Canonical correlation analysis (CCA) is a statistical method for studying the relationship between two sets of variables. It finds a linear projection of each set such that the projected variables are maximally correlated. In the context of joint embedding, these projections map two different types of data into a common vector space, where related items can be compared directly.

CCA has been used successfully in several applications of joint embedding, including text generation, translation, and sentiment analysis. However, it has some limitations, including the assumption of linear relationships between variables and the need for large amounts of data.
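For readers who want to see the mechanics, here is a small NumPy sketch of linear CCA computed by whitening each view and taking a singular value decomposition. The helper names `inv_sqrt` and `fit_cca`, the regularization constant, and the synthetic two-view data are assumptions made for illustration; a production setup would more likely rely on an established library.

```python
import numpy as np

def inv_sqrt(matrix):
    """Inverse square root of a symmetric positive-definite matrix."""
    eigvals, eigvecs = np.linalg.eigh(matrix)
    return eigvecs @ np.diag(eigvals ** -0.5) @ eigvecs.T

def fit_cca(X, Y, n_components, reg=1e-6):
    """Return projections A, B and the canonical correlations."""
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    n = X.shape[0]
    Sxx = Xc.T @ Xc / (n - 1) + reg * np.eye(X.shape[1])  # small ridge for stability
    Syy = Yc.T @ Yc / (n - 1) + reg * np.eye(Y.shape[1])
    Sxy = Xc.T @ Yc / (n - 1)
    Sxx_is, Syy_is = inv_sqrt(Sxx), inv_sqrt(Syy)
    U, s, Vt = np.linalg.svd(Sxx_is @ Sxy @ Syy_is)
    A = Sxx_is @ U[:, :n_components]
    B = Syy_is @ Vt.T[:, :n_components]
    return A, B, s[:n_components]

# Synthetic data: two views that share a 2-dimensional latent signal.
rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 2))
X = latent @ rng.normal(size=(2, 5)) + 0.1 * rng.normal(size=(500, 5))
Y = latent @ rng.normal(size=(2, 4)) + 0.1 * rng.normal(size=(500, 4))

A, B, correlations = fit_cca(X, Y, n_components=2)
X_shared = (X - X.mean(axis=0)) @ A   # view 1 in the common space
Y_shared = (Y - Y.mean(axis=0)) @ B   # view 2 in the common space
```

After fitting, `X_shared` and `Y_shared` live in the same low-dimensional space, and `correlations` reports how strongly each pair of projected dimensions agrees.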

Deep Canonical Correlation Analysis (DCCA)

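Deep canonical correlation analysis (DCCA) is an extension of CCA that uses deep learning to map two different types of data into a common vector space. Each data type is passed through its own neural network, and the networks are trained so that their outputs are maximally correlated. Because the networks are non-linear, DCCA can handle non-linear relationships between variables, making it a more flexible and powerful tool for joint embedding.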

Like CCA, DCCA has been used successfully in several applications of joint embedding. However, it also has some limitations, including the need for large amounts of data and the complexity of the training process.
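The toy example below illustrates why non-linear transforms matter. It is not DCCA itself: a hand-picked squaring function stands in for the transform a trained network might learn, and the data is synthetic. It only shows that a linear measure can miss a relationship that becomes obvious after a non-linear mapping.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=2000)
y = x ** 2 + 0.05 * rng.normal(size=2000)   # y depends on x, but not linearly

def correlation(a, b):
    """Pearson correlation between two 1-D arrays."""
    return float(np.corrcoef(a, b)[0, 1])

# A purely linear view sees almost no relationship.
linear_corr = correlation(x, y)

# Hypothetical stand-in for a learned non-linear transform of x.
nonlinear_corr = correlation(x ** 2, y)
```

Here `linear_corr` stays near zero while `nonlinear_corr` is close to one. DCCA aims to discover useful transforms like this automatically rather than having them specified by hand.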

Applications of Joint Embedding in LLMs

Joint embedding has a wide range of applications in LLMs. These applications generally involve tasks that require the model to understand the relationship between different types of data and generate human-like text based on this understanding.

Some common applications of joint embedding in LLMs include text generation, translation, sentiment analysis, image captioning, and other multimodal tasks. Each of these applications has its own challenges and requirements, and the choice of joint embedding technique can have a significant impact on the performance of the model.

Text Generation

Text generation is a common application of joint embedding in LLMs. In this context, joint embedding allows the model to understand the semantic relationship between different words or phrases, enabling it to generate human-like text.

Joint embedding is particularly useful for tasks that involve generating text based on a given input. For example, in a chatbot application, joint embedding can allow the model to generate appropriate responses based on the user’s input.

Translation

Translation is another common application of joint embedding in LLMs. In this context, joint embedding allows the model to understand the semantic relationship between words or phrases in different languages, enabling it to translate text from one language to another.

Joint embedding is particularly useful for tasks that involve translating text between languages that have different grammatical structures or vocabulary. For example, in a translation application, joint embedding can allow the model to translate text from English to Chinese, despite the significant differences between these two languages.
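A simplified way to picture this is word lookup by nearest neighbor in a shared space. The sketch below uses tiny hand-written vectors for three English and three Chinese words; the vectors and the `nearest_translation` helper are hypothetical and chosen only so the example is easy to follow. Real systems learn much higher-dimensional embeddings and translate whole sentences rather than single words.

```python
import numpy as np

# Hypothetical, hand-written embeddings in a shared 3-dimensional space.
english = {
    "cat": np.array([0.9, 0.1, 0.0]),
    "dog": np.array([0.1, 0.9, 0.0]),
    "house": np.array([0.0, 0.1, 0.9]),
}
chinese = {
    "猫": np.array([0.8, 0.2, 0.1]),
    "狗": np.array([0.2, 0.8, 0.1]),
    "房子": np.array([0.1, 0.0, 0.9]),
}

def cosine(u, v):
    """Cosine similarity between two vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

def nearest_translation(word):
    """Return the Chinese word whose embedding is closest to the English word."""
    query = english[word]
    return max(chinese, key=lambda candidate: cosine(query, chinese[candidate]))

translations = {word: nearest_translation(word) for word in english}
```

Because both vocabularies share one space, no word-by-word dictionary is needed: closeness in the space plays that role.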

Challenges and Limitations of Joint Embedding

Despite its many benefits, joint embedding also has some challenges and limitations. These challenges generally involve the complexity of the mapping process, the need for large amounts of data, and the difficulty of handling non-linear relationships between variables.

Understanding these challenges and limitations is crucial for effectively implementing joint embedding in LLMs. By addressing these challenges, researchers and practitioners can improve the performance of these models and expand their range of applications.

Complexity of the Mapping Process

The process of mapping two different types of data into a common vector space can be complex. This complexity can make it difficult to implement joint embedding in LLMs, particularly for tasks that involve diverse types of data or non-linear relationships between variables.

Despite this challenge, several techniques have been developed to simplify the mapping process. These techniques, including CCA and DCCA, can help to reduce the complexity of the mapping process and make joint embedding more accessible for LLMs.

Need for Large Amounts of Data

Joint embedding often requires large amounts of data. This need for data can make it difficult to implement joint embedding in LLMs, particularly for tasks that involve rare or specialized types of data.

Despite this challenge, several techniques have been developed to handle data scarcity. These techniques, including data augmentation and transfer learning, can help to reduce the need for large amounts of data and make joint embedding more feasible for LLMs.
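One way transfer learning can reduce data needs is to keep pretrained encoders frozen and learn only a small mapping between their output spaces. The sketch below illustrates this under strong simplifying assumptions: the "pretrained" embeddings are synthetic, the true relationship between the two spaces is linear, and only 20 paired examples are used for fitting. All variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-ins for embeddings from two frozen, pretrained encoders (synthetic data).
true_map = rng.normal(size=(8, 5))
source_emb = rng.normal(size=(120, 8))
target_emb = source_emb @ true_map + 0.01 * rng.normal(size=(120, 5))

# Pretend only 20 paired examples are available for training.
train_src, train_tgt = source_emb[:20], target_emb[:20]
test_src, test_tgt = source_emb[20:], target_emb[20:]

# Fit only a linear map between the two spaces by least squares.
learned_map, *_ = np.linalg.lstsq(train_src, train_tgt, rcond=None)

# Check how well the map generalizes to pairs it never saw.
predicted = test_src @ learned_map
relative_error = np.linalg.norm(predicted - test_tgt) / np.linalg.norm(test_tgt)
```

Because only the small map is fitted, far fewer paired examples are needed than for training both encoders from scratch. How well this carries over to real data depends on how close the two spaces are to a simple relationship.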

Future Directions for Joint Embedding

The field of joint embedding is still evolving, and there are many exciting directions for future research. These directions generally involve improving the performance of joint embedding techniques, expanding their range of applications, and addressing their challenges and limitations.

By pursuing these directions, researchers and practitioners can continue to push the boundaries of what is possible with joint embedding, opening up new possibilities for LLMs and other applications of machine learning.

Improving Performance

One exciting direction for future research involves improving the performance of joint embedding techniques. This could involve developing new techniques that can handle more complex relationships between variables, or improving existing techniques to make them more efficient or accurate.

By improving the performance of joint embedding techniques, researchers and practitioners can make LLMs more effective at tasks such as text generation, translation, and sentiment analysis. This could open up new possibilities for these models and expand their range of applications.

Expanding Applications

Another exciting direction for future research involves expanding the range of applications for joint embedding. This could involve applying joint embedding to new types of data, or using it for new tasks that have not been explored before.

By expanding the range of applications for joint embedding, researchers and practitioners can discover new uses for LLMs and other applications of machine learning. This could lead to new insights and breakthroughs in fields such as natural language processing, computer vision, and artificial intelligence.