In artificial intelligence and machine learning, Xavier Initialization, also known as Glorot Initialization, is a technique for choosing the initial weights of a neural network. It is named after Xavier Glorot, who proposed it with Yoshua Bengio in their 2010 paper "Understanding the difficulty of training deep feedforward neural networks", one of the early works to show how much weight initialization matters in deep learning. The method sets the scale of the initial random weights so that signals neither shrink nor blow up as they pass through the layers, which often makes training faster and more stable.

Understanding Xavier Initialization requires a basic understanding of neural networks and their architecture. Neural networks are a series of algorithms that are designed to recognize patterns. They interpret sensory data through a kind of machine perception, labeling or clustering raw input. The patterns they recognize are numerical, contained in vectors, into which all real-world data, be it images, sound, text or time series, must be translated.

The Importance of Weight Initialization

In a neural network, weights are parameters that determine the strength of the connection between two neurons. When a neural network is initialized, these weights are set to random values. The choice of these initial weights can have a significant impact on the learning process and the final performance of the network. Weights that are initialized too large or too small can slow convergence or even prevent the network from learning altogether.

Proper weight initialization does not set the weights to their final, optimal values; it gives them a starting scale from which training can make fast progress. It also helps mitigate the vanishing and exploding gradients problems, which are common issues in training deep neural networks. The vanishing gradients problem occurs when the gradients of the loss function become too small, slowing learning or stopping it altogether. The exploding gradients problem, on the other hand, occurs when the gradients become too large, making training unstable.

Vanishing and Exploding Gradients

The vanishing gradients problem is particularly troublesome in deep networks with many layers. As the error is backpropagated from the output layer to the input layer, it is multiplied at each layer by that layer's weights and by the derivative of its activation function. If these factors are consistently smaller than one in magnitude, the error shrinks exponentially with depth and effectively vanishes. The weights in the earlier layers then learn very slowly or not at all, making the network difficult to train.

The exploding gradients problem is the opposite of the vanishing gradients problem: when the per-layer factors are consistently larger than one, the gradients grow exponentially with depth instead of shrinking. The weight updates then become very large, which makes training unstable and can prevent the network from converging to a good solution.
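To make the exponential effect concrete, the sketch below pushes a random vector through a stack of square linear layers whose weights are drawn from a zero-mean normal distribution with a fixed standard deviation. It assumes a purely linear network (no activation function, no biases) to keep the arithmetic clean, and the function names are hypothetical. Each layer multiplies the signal's standard deviation by roughly sqrt(width) × weight_std, so with width 100 a standard deviation of 0.01 shrinks the signal about tenfold per layer, while 1.0 grows it about tenfold. For square layers, backpropagation multiplies by the transposes of the same matrices, so gradients shrink or grow at a similar rate.

```python
import math
import random


def matvec(weights, x):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def std(values):
    """Population standard deviation of a list of numbers."""
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))


def signal_std_per_layer(weight_std, width=100, depth=10, seed=0):
    """Push a unit-variance random vector through `depth` square linear layers
    (no activation function) with weights drawn from N(0, weight_std**2) and
    return the standard deviation of the signal after each layer."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(width)]
    stds = []
    for _ in range(depth):
        w = [[rng.gauss(0.0, weight_std) for _ in range(width)] for _ in range(width)]
        x = matvec(w, x)
        stds.append(std(x))
    return stds


if __name__ == "__main__":
    # With width 100, each layer scales the std by about sqrt(100) * weight_std.
    for weight_std in (0.01, 1.0):
        stds = signal_std_per_layer(weight_std)
        print(f"weight_std={weight_std}:", ", ".join(f"{s:.1e}" for s in stds))
```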

Xavier Initialization Explained

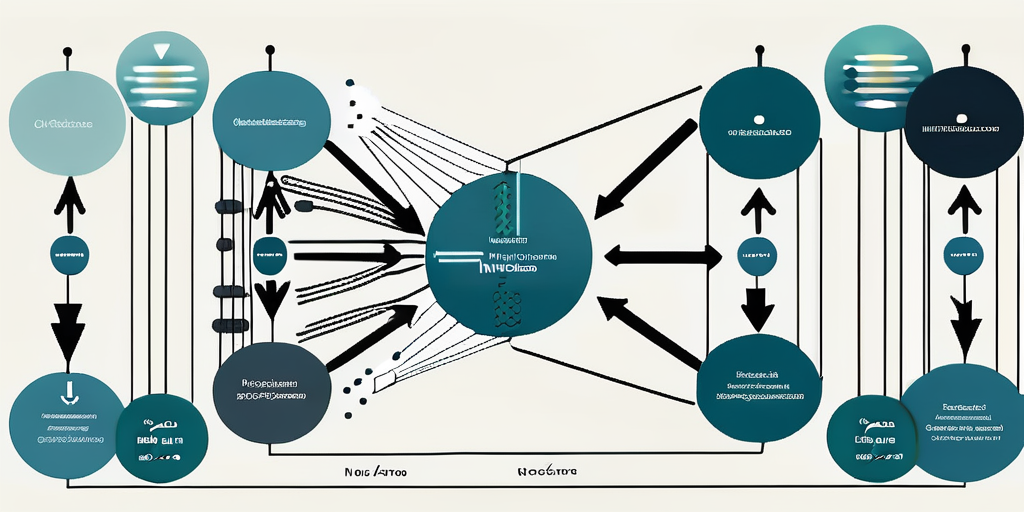

Xavier Initialization is designed to combat these problems by choosing the scale of the initial weights so that the variance of each layer's outputs matches the variance of its inputs during the forward pass, while the variance of the gradients is approximately preserved during the backward pass. The weights are drawn from a distribution with zero mean and variance $2/(n_i + n_o)$, where $n_i$ and $n_o$ are the numbers of input and output connections of the layer.
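The variance $2/(n_i + n_o)$, where $n_i$ and $n_o$ count a layer's inputs and outputs, can be motivated with a short calculation. It rests on the simplifying assumptions usually stated for it: the weights and inputs are independent with zero mean, and the activation is treated as linear near zero. For one output $y = \sum_{k=1}^{n_i} W_k x_k$ of a layer:

$$
\operatorname{Var}(y) = \sum_{k=1}^{n_i} \operatorname{Var}(W_k x_k) = n_i \operatorname{Var}(W) \operatorname{Var}(x)
$$

Keeping $\operatorname{Var}(y) = \operatorname{Var}(x)$ in the forward pass therefore requires $n_i \operatorname{Var}(W) = 1$. The same argument applied to the backward pass, where the gradient reaching each input sums contributions from the $n_o$ outputs, requires $n_o \operatorname{Var}(W) = 1$. Both conditions hold only when $n_i = n_o$, so the compromise takes the reciprocal of their average:

$$
\operatorname{Var}(W) = \frac{2}{n_i + n_o}
$$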

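The formula for Xavier Initialization (in its uniform form) is as follows: the weights are drawn from the uniform distribution

$$
W \sim U\left[-\sqrt{\frac{6}{n_i + n_o}},\ \sqrt{\frac{6}{n_i + n_o}}\,\right]
$$

where $n_i$ is the number of inputs to the layer (its fan-in) and $n_o$ is the number of outputs (its fan-out). A uniform distribution on $[-a, a]$ has variance $a^2/3$, so this range gives the weights a variance of exactly $2/(n_i + n_o)$; a normal variant draws them from $\mathcal{N}(0,\ 2/(n_i + n_o))$ instead. At this scale the weights are small enough to avoid the exploding gradients problem but large enough to avoid the vanishing gradients problem.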
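A minimal sketch of the uniform variant, plus the common normal variant, in plain Python using only the standard library. It assumes a layer's weights are stored as a list of rows with one row per output (so the matrix is fan_out × fan_in); the function names `xavier_uniform` and `xavier_normal` are illustrative, not a particular library's API. The uniform bound is sqrt(6 / (fan_in + fan_out)) and the normal standard deviation is sqrt(2 / (fan_in + fan_out)), which give the same variance.

```python
import math
import random


def xavier_uniform(fan_in, fan_out, rng=random):
    """Return a fan_out x fan_in weight matrix (list of rows) drawn from
    U[-a, a] with a = sqrt(6 / (fan_in + fan_out))."""
    a = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-a, a) for _ in range(fan_in)] for _ in range(fan_out)]


def xavier_normal(fan_in, fan_out, rng=random):
    """Return a fan_out x fan_in weight matrix drawn from
    N(0, 2 / (fan_in + fan_out))."""
    std = math.sqrt(2.0 / (fan_in + fan_out))
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


if __name__ == "__main__":
    rng = random.Random(0)
    w = xavier_uniform(fan_in=300, fan_out=100, rng=rng)
    flat = [v for row in w for v in row]
    # The weights have zero mean, so the mean square estimates the variance.
    empirical_var = sum(v * v for v in flat) / len(flat)
    print(f"target variance {2 / (300 + 100):.5f}, empirical {empirical_var:.5f}")
```

In practice, frameworks ship this directly, for example `torch.nn.init.xavier_uniform_` and `torch.nn.init.xavier_normal_` in PyTorch, or the `GlorotUniform` and `GlorotNormal` initializers in Keras.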

Benefits of Xavier Initialization

One of the main benefits of Xavier Initialization is that it can speed up training. Starting the weights at a well-chosen scale helps the network converge in fewer iterations, which matters most for deep learning models that are computationally intensive and time-consuming to train.

Another benefit of Xavier Initialization is that it helps mitigate the vanishing and exploding gradients problems. Because the variance of the activations and of the gradients is kept roughly constant from layer to layer, the gradients stay within a manageable range, making the network easier to train.
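The following sketch checks this numerically for the simplest case the derivation covers: a stack of square linear layers (no activation function, no biases), where Xavier's variance 2 / (n + n) reduces to 1 / n. It runs a forward pass on a unit-variance input and a backward pass on a unit-variance gradient, multiplying by each weight matrix's transpose, and reports the standard deviation of each at the far end. The function name and the chosen width and depth are assumptions for illustration.

```python
import math
import random


def std(values):
    """Population standard deviation of a list of numbers."""
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))


def forward_and_backward_std(width=100, depth=10, seed=0):
    """Xavier-initialize `depth` square linear layers, then return the std of
    the final activations and of the gradient that reaches the input."""
    rng = random.Random(seed)
    # Xavier variance for a square layer: 2 / (width + width) = 1 / width.
    weight_std = math.sqrt(2.0 / (width + width))
    layers = [[[rng.gauss(0.0, weight_std) for _ in range(width)]
               for _ in range(width)] for _ in range(depth)]

    # Forward pass: x_{l+1} = W_l x_l.
    x = [rng.gauss(0.0, 1.0) for _ in range(width)]
    for w in layers:
        x = [sum(wij * xj for wij, xj in zip(row, x)) for row in w]

    # Backward pass: g_l = W_l^T g_{l+1}, starting from a unit-variance gradient.
    g = [rng.gauss(0.0, 1.0) for _ in range(width)]
    for w in reversed(layers):
        g = [sum(w[i][j] * g[i] for i in range(width)) for j in range(width)]

    return std(x), std(g)


if __name__ == "__main__":
    fwd, bwd = forward_and_backward_std()
    print(f"activation std after 10 layers: {fwd:.2f}")
    print(f"gradient std after 10 layers:   {bwd:.2f}")
```

Both values should stay on the order of 1, in contrast to a fixed weight scale such as 0.01 or 1.0, which drives the signal toward zero or infinity exponentially with depth.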

Limitations of Xavier Initialization

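While Xavier Initialization can be very effective, it is not without its limitations. The main one is that its derivation assumes the activation function is approximately linear around zero, which roughly holds for symmetric functions such as tanh near the origin. Many neural networks, however, use activation functions that are far from linear there. ReLU, for example, sets all negative inputs to zero, which roughly halves the mean square of the activations at every layer; with Xavier-initialized weights, the signal in a deep ReLU network therefore shrinks as depth grows, and the vanishing gradients problem can return.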
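A rough numerical check of the ReLU case, written as a sketch under simplifying assumptions (square layers of width 256, eight layers, no biases, unit-variance Gaussian input). It compares Xavier's variance, which is 2 / (n + n) = 1 / n for a square layer, with the variance 2 / n used by He Initialization, one of the alternatives discussed below. The function name `relu_mean_square` is hypothetical.

```python
import math
import random


def relu_mean_square(weight_var, width=256, depth=8, seed=0):
    """Mean square of the activations after `depth` square ReLU layers whose
    weights are drawn from N(0, weight_var); the input has unit variance."""
    rng = random.Random(seed)
    sd = math.sqrt(weight_var)
    a = [rng.gauss(0.0, 1.0) for _ in range(width)]
    for _ in range(depth):
        w = [[rng.gauss(0.0, sd) for _ in range(width)] for _ in range(width)]
        z = [sum(wij * aj for wij, aj in zip(row, a)) for row in w]
        a = [max(0.0, zi) for zi in z]  # ReLU
    return sum(ai * ai for ai in a) / width


if __name__ == "__main__":
    n = 256
    xavier = relu_mean_square(2.0 / (n + n))  # Xavier: 1/n for a square layer
    he = relu_mean_square(2.0 / n)            # He initialization (fan-in mode)
    # In expectation, the Xavier value halves at every layer, while He stays near 1.
    print(f"Xavier: {xavier:.4f}")
    print(f"He:     {he:.4f}")
```

Because both runs use the same seed and ReLU is positively homogeneous (relu(c·z) = c·relu(z) for c > 0), the He result here is exactly 2**8 times the Xavier result up to floating-point rounding; only the absolute values depend on the random draws.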

Another limitation of Xavier Initialization is that it sees each layer only through its number of inputs and outputs and does not otherwise take the architecture of the network into account. Different architectures may require different initialization strategies. Convolutional neural networks, which are commonly used in image recognition tasks, are one example: very deep convolutional networks with ReLU activations often train better with a different scheme.
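Applying Xavier Initialization to a convolutional layer still requires a fan-in and fan-out. A common convention, used for example by PyTorch and Keras, counts the weights connected to a single output position: fan-in is input channels × kernel height × kernel width, and fan-out is output channels × kernel height × kernel width. The helper names below are hypothetical, and the sketch ignores stride and padding, which this convention does not account for.

```python
import math


def conv2d_fans(out_channels, in_channels, kernel_h, kernel_w):
    """Fan-in and fan-out of a 2-D convolution kernel under the
    channels x receptive-field convention."""
    receptive_field = kernel_h * kernel_w
    fan_in = in_channels * receptive_field
    fan_out = out_channels * receptive_field
    return fan_in, fan_out


def xavier_uniform_bound(fan_in, fan_out):
    """Bound a of the Xavier uniform distribution U[-a, a]."""
    return math.sqrt(6.0 / (fan_in + fan_out))


if __name__ == "__main__":
    # A 3x3 convolution from 3 input channels (e.g. RGB) to 64 output channels.
    fan_in, fan_out = conv2d_fans(out_channels=64, in_channels=3, kernel_h=3, kernel_w=3)
    print(fan_in, fan_out, xavier_uniform_bound(fan_in, fan_out))
```

For that 3×3 convolution from 3 to 64 channels, the convention gives a fan-in of 27 and a fan-out of 576.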

Alternatives to Xavier Initialization

Given the limitations of Xavier Initialization, several alternatives have been proposed. One of the most popular is He Initialization (also known as Kaiming Initialization), which was designed specifically for networks with ReLU activation functions. Like Xavier Initialization, it draws the weights from a zero-mean distribution, but it sets the variance to $2/n_i$, depending only on the number of inputs; the extra factor of 2 compensates for ReLU zeroing roughly half of its inputs.

Another alternative is LeCun Initialization, which also draws zero-mean weights but uses variance $1/n_i$, depending only on the number of inputs. It was originally recommended for sigmoid-shaped activation functions such as tanh, and it is the standard choice for SELU activations in self-normalizing networks.
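The three schemes differ only in how they turn fan-in and fan-out into a weight variance: Xavier uses 2 / (fan_in + fan_out), He (in its fan-in mode) uses 2 / fan_in, and LeCun uses 1 / fan_in. The sketch below compares them side by side; the function names are illustrative rather than any library's API. It also derives the matching uniform bound from the fact that U[-a, a] has variance a² / 3, which for Xavier reproduces sqrt(6 / (fan_in + fan_out)).

```python
import math


def init_std(scheme, fan_in, fan_out):
    """Standard deviation of the zero-mean normal variant of each scheme."""
    if scheme == "xavier":  # Glorot: Var(W) = 2 / (fan_in + fan_out)
        return math.sqrt(2.0 / (fan_in + fan_out))
    if scheme == "he":      # He, fan-in mode: Var(W) = 2 / fan_in
        return math.sqrt(2.0 / fan_in)
    if scheme == "lecun":   # LeCun: Var(W) = 1 / fan_in
        return math.sqrt(1.0 / fan_in)
    raise ValueError(f"unknown scheme: {scheme!r}")


def uniform_bound(scheme, fan_in, fan_out):
    """Bound a of the uniform variant U[-a, a] with the same variance,
    using Var(U[-a, a]) = a**2 / 3."""
    return math.sqrt(3.0) * init_std(scheme, fan_in, fan_out)


if __name__ == "__main__":
    for scheme in ("xavier", "he", "lecun"):
        print(f"{scheme:>6}: std={init_std(scheme, 512, 256):.4f}  "
              f"uniform bound={uniform_bound(scheme, 512, 256):.4f}")
```

For a square layer (fan_in equal to fan_out), Xavier and LeCun give the same variance, and He is larger by a factor of 2.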

Conclusion

In conclusion, Xavier Initialization helps neural networks train faster and more reliably. By giving the initial weights a scale matched to each layer's number of inputs and outputs, it can speed up learning and help prevent the vanishing and exploding gradients problems. However, it is not without its limitations, and other initialization strategies may be more appropriate depending on the specific architecture and activation functions of the network.

Despite these limitations, Xavier Initialization remains a popular choice for weight initialization in many deep learning models. Its simplicity and effectiveness make it a valuable tool in the arsenal of any machine learning practitioner.