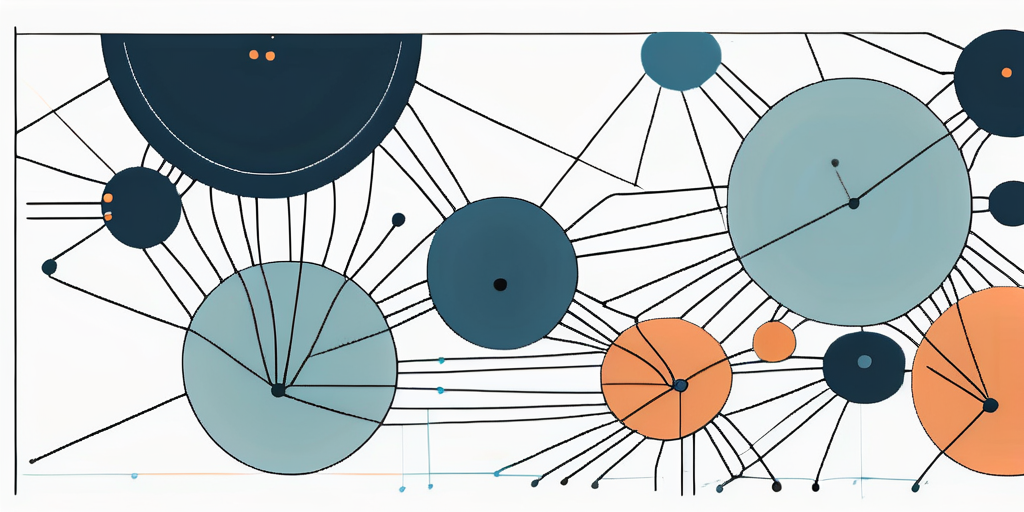

In the realm of Artificial Intelligence (AI), K-means clustering is a popular and versatile unsupervised learning algorithm. It partitions a dataset into K distinct groups, or clusters, so that observations in the same cluster are close to one another under a chosen distance measure, most often Euclidean distance. The algorithm is widely used in machine learning, data mining, pattern recognition, image analysis, information retrieval, bioinformatics, and other fields.

The name combines ‘K’, the number of clusters, with ‘means’: each cluster is represented by a centroid, which is the mean of the data points assigned to it. The algorithm assigns each data point to the nearest centroid, then moves each centroid to the mean position of its assigned points. These two steps repeat until the centroids no longer change significantly, at which point the algorithm has converged.
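For reference, the quantity K-means tries to minimize is the within-cluster sum of squared distances. Writing the data points as $x_i$, the clusters as $C_1, \dots, C_K$ and the centroids as $\mu_1, \dots, \mu_K$ (notation introduced here for illustration), the objective and the two alternating steps can be written as:

```latex
\begin{aligned}
J &= \sum_{k=1}^{K} \sum_{x_i \in C_k} \lVert x_i - \mu_k \rVert^2 \\
C_k &= \{\, x_i : \lVert x_i - \mu_k \rVert \le \lVert x_i - \mu_j \rVert \text{ for all } j \,\} && \text{(assignment; ties broken arbitrarily)} \\
\mu_k &= \frac{1}{\lvert C_k \rvert} \sum_{x_i \in C_k} x_i && \text{(update)}
\end{aligned}
```

Neither step can increase $J$: reassigning a point to a closer centroid shrinks its term, and the mean is the point that minimizes the sum of squared Euclidean distances to a cluster's members. Because there are only finitely many ways to partition the data, the procedure stops, but only at a local minimum of $J$.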

Understanding the K-means Algorithm

The K-means algorithm starts by randomly initializing ‘K’ centroids, where ‘K’ is a user-defined parameter representing the number of clusters. Each data point is then assigned to the nearest centroid based on a distance measure, typically the Euclidean distance. The position of each centroid is then updated to be the mean position of all data points assigned to it. This process of assignment and update is repeated until the positions of the centroids stabilize, or after a predefined number of iterations.
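The loop described above can be sketched in plain Python. This is a minimal illustration, not a reference implementation: it assumes points are tuples of floats, seeds the centroids with K data points sampled without replacement (one common choice), and stops when no centroid moves more than a tolerance `tol` or after `max_iter` iterations. The function names are hypothetical.

```python
import math
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def assign(points, centroids):
    """Assignment step: index of the nearest centroid for every point."""
    return [
        min(range(len(centroids)), key=lambda j: squared_distance(p, centroids[j]))
        for p in points
    ]


def kmeans(points, k, max_iter=100, tol=1e-6, seed=None):
    """Basic K-means (Lloyd's algorithm) on a list of coordinate tuples.

    Starts from k data points sampled at random, then alternates the
    assignment and update steps until no centroid moves more than `tol`
    or `max_iter` iterations have run. Returns (centroids, labels).
    """
    rng = random.Random(seed)
    centroids = [tuple(p) for p in rng.sample(points, k)]
    for _ in range(max_iter):
        labels = assign(points, centroids)
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for j, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(old)  # empty cluster: keep the old centroid
        # Stopping criterion: largest distance any centroid moved this round.
        shift = max(math.dist(a, b) for a, b in zip(centroids, new_centroids))
        centroids = new_centroids
        if shift <= tol:
            break
    # Final labels are computed against the final centroids.
    return centroids, assign(points, centroids)


data = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids, labels = kmeans(data, k=2, seed=0)
print(labels)  # first three points share one label, last three the other
```

Keeping an empty cluster's old centroid is only one possible policy; other implementations re-seed it from a far-away point instead.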

One of the key features of the K-means algorithm is its simplicity and efficiency. It is easy to understand and implement, and each iteration takes time roughly proportional to the number of points times the number of clusters times the number of features, so it scales well to large datasets. However, it also has some limitations. It implicitly assumes that clusters are roughly spherical and of similar size, which is often not the case in real-world data. It is also sensitive to the initial placement of centroids and may converge to a local optimum rather than the best possible clustering.
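The sensitivity to initialization is easy to reproduce. The sketch below uses a hypothetical one-dimensional helper, `lloyd_1d`, with hand-picked starting centroids (no randomness, so the outcome is deterministic) and reports the final centroids together with the within-cluster sum of squares for two different starts on the same data.

```python
def lloyd_1d(xs, centroids, max_iter=100):
    """Lloyd's K-means on 1-D data from the given starting centroids.

    Returns (final centroids, within-cluster sum of squares).
    """
    centroids = list(centroids)
    for _ in range(max_iter):
        # Assignment step: index of the nearest centroid for each value.
        labels = [min(range(len(centroids)), key=lambda j: abs(x - centroids[j])) for x in xs]
        # Update step: mean of each cluster (keep the old value if empty).
        new = []
        for j, c in enumerate(centroids):
            members = [x for x, lab in zip(xs, labels) if lab == j]
            new.append(sum(members) / len(members) if members else c)
        if new == centroids:  # nothing moved: converged
            break
        centroids = new
    wcss = sum(min((x - c) ** 2 for c in centroids) for x in xs)
    return centroids, wcss


data = [0, 1, 10, 11, 20, 21]
good = lloyd_1d(data, [0, 10, 20])
bad = lloyd_1d(data, [0, 1, 15])
print(good)  # ([0.5, 10.5, 20.5], 1.5)
print(bad)   # ([0.0, 1.0, 15.5], 101.0)
```

Both runs converge, but the second one is stuck: two centroids share the pair {0, 1} while a single centroid covers both {10, 11} and {20, 21}, and no single assignment or update step can escape that configuration.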

Steps of the K-means Algorithm

The K-means algorithm operates in a series of iterative steps. The first step is initialization, where ‘K’ centroids are placed in the data space at random (a common choice is K randomly selected data points). The second step is assignment, where each data point is assigned to the nearest centroid. The third step is update, where each centroid is moved to the mean position of all data points assigned to it. The assignment and update steps are then repeated until the algorithm converges.

Convergence is typically determined based on a stopping criterion, such as a maximum number of iterations or a minimum amount of change in the centroid positions. Once the algorithm has converged, the final step is to output the resulting clusters. Each data point is assigned to a cluster based on its nearest centroid, and the centroid positions represent the center of each cluster.
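A small hand-worked run makes the loop and the stopping rule concrete. Take the one-dimensional points 1, 2, 10 and 12 with K = 2 and, as an arbitrary illustrative choice, starting centroids $\mu_1 = 1$ and $\mu_2 = 2$:

```latex
\begin{aligned}
&\text{Iteration 1: } C_1 = \{1\},\ C_2 = \{2, 10, 12\}
  &&\Rightarrow\ \mu_1 = 1,\ \mu_2 = \tfrac{2 + 10 + 12}{3} = 8 \\
&\text{Iteration 2: } C_1 = \{1, 2\},\ C_2 = \{10, 12\}
  &&\Rightarrow\ \mu_1 = \tfrac{1 + 2}{2} = 1.5,\ \mu_2 = \tfrac{10 + 12}{2} = 11 \\
&\text{Iteration 3: } C_1 = \{1, 2\},\ C_2 = \{10, 12\}
  &&\Rightarrow\ \mu_1 = 1.5,\ \mu_2 = 11 \quad \text{(no change: converged)}
\end{aligned}
```

In iteration 2 the point 2 switches clusters because it is now closer to $\mu_1 = 1$ (distance 1) than to $\mu_2 = 8$ (distance 6). In iteration 3 no assignment changes, so the centroids stay where they are and the stopping criterion is met.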

Distance Measures in K-means

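The K-means algorithm relies on a distance measure to determine the similarity between data points and centroids. The most commonly used distance measure is the Euclidean distance, which is the straight-line distance between two points in a multi-dimensional space. Other distance measures, such as the Manhattan distance or the Minkowski distance, can also be used, depending on the nature of the data. Strictly speaking, the mean-based update step belongs to (squared) Euclidean distance, because the mean is the point that minimizes the sum of squared Euclidean distances to a cluster's members. With the Manhattan distance, the corresponding center is the coordinate-wise median, which gives the K-medians variant.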
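The distances named above are all members of one family, the Minkowski distance of order p: p = 1 gives Manhattan and p = 2 gives Euclidean. The sketch below (function names are hypothetical) also shows that the same point can have a different nearest centroid depending on which distance is used.

```python
def minkowski(a, b, p):
    """Minkowski distance of order p (p >= 1) between two equal-length points."""
    if p < 1:
        raise ValueError("p must be at least 1 for a true distance")
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1 / p)


def manhattan(a, b):
    return minkowski(a, b, 1)  # sum of absolute coordinate differences


def euclidean(a, b):
    return minkowski(a, b, 2)  # straight-line distance


def nearest_centroid(point, centroids, distance):
    """Index of the centroid closest to `point` under the given distance."""
    return min(range(len(centroids)), key=lambda j: distance(point, centroids[j]))


centroids = [(3.0, 3.0), (0.0, 4.5)]
point = (0.0, 0.0)
print(nearest_centroid(point, centroids, euclidean))  # 0: sqrt(18) ≈ 4.24 < 4.5
print(nearest_centroid(point, centroids, manhattan))  # 1: 4.5 < 6
```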

The choice of distance measure can significantly change which centroid each point is closest to, and therefore the final clusters. Feature scaling matters as well: the Euclidean distance is dominated by features with large numeric ranges, so features are usually standardized or rescaled before applying the K-means algorithm. The Manhattan distance grows linearly rather than quadratically with large coordinate differences, so it is less influenced by outliers, which can make it a better choice for data with heavy-tailed distributions.
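To see why scaling matters, the sketch below z-scores each feature (subtract the column mean, divide by the column's population standard deviation) and compares nearest neighbors before and after. The customer data is made up, and z-scoring is only one common choice; min-max scaling is another.

```python
import math
import statistics


def standardize(rows):
    """Z-score each column; a constant column is mapped to all zeros."""
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    stds = [statistics.pstdev(c) for c in cols]
    return [
        tuple((x - m) / s if s > 0 else 0.0 for x, m, s in zip(row, means, stds))
        for row in rows
    ]


def nearest_neighbor(rows, i):
    """Index of the row closest (Euclidean) to rows[i], excluding itself."""
    return min((j for j in range(len(rows)) if j != i),
               key=lambda j: math.dist(rows[i], rows[j]))


# Hypothetical customers: (age in years, annual income in dollars).
customers = [(20, 40_000), (22, 42_000), (60, 41_000), (62, 43_000)]
print(nearest_neighbor(customers, 0))               # 2: income differences dominate
print(nearest_neighbor(standardize(customers), 0))  # 1: age now counts too
```

On the raw data, a 40-year age gap is outweighed by a 1,000-dollar income gap; after standardization both features contribute on comparable scales.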

Applications of K-means Clustering

K-means clustering is widely used in various fields due to its simplicity and efficiency. In machine learning, it is often used for exploratory data analysis, feature extraction, and anomaly detection. In data mining, it is used for pattern recognition, cluster analysis, and data summarization. In image analysis, it is used for image segmentation, object recognition, and image compression.

In bioinformatics, K-means clustering is used to classify genes or proteins based on their expression patterns. In information retrieval, it is used to group similar documents together for efficient search and retrieval. In customer segmentation, it is used to identify distinct groups of customers based on their purchasing behavior, demographics, or other characteristics.

Machine Learning and Data Mining

In the field of machine learning and data mining, K-means clustering is a powerful tool for exploratory data analysis. It can be used to identify patterns and structures in the data that may not be apparent from a cursory examination. For instance, it can reveal groups of similar observations, highlight outliers, or suggest potential features for further analysis.

K-means clustering can also be used for feature extraction, where the goal is to transform the original data into a new set of features that are more informative or easier to work with. For instance, the cluster assignments can be used as new categorical features, or the distances to the centroids can be used as new continuous features. These new features can then be used in subsequent machine learning tasks, such as classification or regression.
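Both kinds of derived features can be computed from a fitted set of centroids. In this sketch the centroids are made-up values standing in for the result of an earlier K-means run, and `cluster_features` is a hypothetical helper that returns a one-hot encoding of the nearest cluster plus the distance to every centroid.

```python
import math


def cluster_features(point, centroids):
    """Turn a point into K-means-derived features.

    Returns (one_hot, distances): a one-hot encoding of the nearest
    centroid's index, and the Euclidean distance to every centroid.
    """
    distances = [math.dist(point, c) for c in centroids]
    nearest = distances.index(min(distances))
    one_hot = [1 if j == nearest else 0 for j in range(len(centroids))]
    return one_hot, distances


# Centroids assumed to come from an earlier K-means fit (values are made up).
centroids = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
one_hot, dists = cluster_features((3.0, 4.0), centroids)
print(one_hot)  # [1, 0, 0]
print(dists)    # approximately [5.0, 8.062, 6.708]
```

The one-hot vector acts as a categorical feature, while the distance vector gives a continuous, K-dimensional representation that downstream classifiers or regressors can use directly.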

Image Analysis and Bioinformatics

In the field of image analysis, K-means clustering is commonly used for image segmentation, where the goal is to partition an image into distinct regions based on color, texture, or other visual characteristics. The K-means algorithm can be applied to the pixel values or other features extracted from the image, resulting in a segmented image where each region corresponds to a cluster.
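A minimal color-based segmentation treats each pixel's (r, g, b) value as a 3-D point, clusters those points, and repaints every pixel with its centroid color. This sketch assumes the image is already available as a flat list of RGB tuples (loading it from a file would need an image library), runs a fixed number of iterations, and uses fixed starting colors so the result is reproducible. The helper names and pixel values are hypothetical.

```python
def squared_color_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(color, centroids):
    return min(range(len(centroids)), key=lambda j: squared_color_distance(color, centroids[j]))


def segment_colors(pixels, init_centroids, iterations=10):
    """K-means on pixel colors; returns (labels, recolored pixels)."""
    centroids = [tuple(float(v) for v in c) for c in init_centroids]
    for _ in range(iterations):
        labels = [nearest(p, centroids) for p in pixels]  # assignment step
        for j in range(len(centroids)):                    # update step
            members = [p for p, lab in zip(pixels, labels) if lab == j]
            if members:  # leave an empty cluster's centroid where it is
                centroids[j] = tuple(sum(ch) / len(members) for ch in zip(*members))
    labels = [nearest(p, centroids) for p in pixels]
    return labels, [centroids[lab] for lab in labels]


# A made-up 2x3 image, flattened row by row: reddish and bluish pixels.
pixels = [(250, 10, 10), (240, 20, 15), (10, 10, 245),
          (250, 5, 0), (20, 15, 235), (5, 0, 250)]
labels, recolored = segment_colors(pixels, init_centroids=[(255, 0, 0), (0, 0, 255)])
print(labels)  # [0, 0, 1, 0, 1, 1]
```

The same recoloring is what makes K-means useful for image compression (color quantization): each pixel only needs to store a cluster index, plus one small palette of K centroid colors for the whole image.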

In the field of bioinformatics, K-means clustering is used to classify genes or proteins based on their expression patterns. The expression levels of genes or proteins across different conditions or time points can be treated as multi-dimensional data points, and the K-means algorithm can be used to identify groups of genes or proteins that exhibit similar expression patterns. These groups can provide insights into the underlying biological processes or pathways.

Limitations and Challenges of K-means Clustering

Despite its simplicity and efficiency, the K-means algorithm has several limitations and challenges. One of the main limitations is that it assumes that clusters are spherical and equally sized, which may not always be the case in real-world data. This can lead to poor clustering results if the actual clusters are elongated, irregularly shaped, or vary significantly in size.

Another limitation of the K-means algorithm is that it is sensitive to the initial placement of centroids. If the centroids are poorly initialized, the algorithm may converge to a local optimum rather than the global optimum. This can be mitigated by using smarter initialization such as K-means++, which spreads the initial centroids apart, or by running the algorithm several times with different initializations and keeping the run with the lowest within-cluster sum of squared distances.
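The K-means++ seeding rule can be sketched as follows: pick the first centroid uniformly at random from the data, then pick each subsequent one with probability proportional to its squared distance from the nearest centroid chosen so far. The function name and sample data are illustrative; the returned points would then be used as the starting centroids of an ordinary K-means run.

```python
import math
import random


def kmeans_plus_plus_init(points, k, seed=None):
    """K-means++ seeding: choose k initial centroids from `points`."""
    rng = random.Random(seed)
    centroids = [rng.choice(points)]  # first centroid: uniform at random
    while len(centroids) < k:
        # Squared distance from each point to its nearest chosen centroid.
        d2 = [min(math.dist(p, c) ** 2 for c in centroids) for p in points]
        if sum(d2) == 0:  # every point coincides with a chosen centroid
            centroids.append(rng.choice(points))
            continue
        # Far-away points are proportionally more likely to be picked;
        # points already chosen have weight 0 and cannot be picked again.
        centroids.append(rng.choices(points, weights=d2, k=1)[0])
    return centroids


data = [(0.0, 0.0), (0.0, 1.0), (10.0, 10.0), (10.0, 11.0), (20.0, 0.0), (20.0, 1.0)]
seeds = kmeans_plus_plus_init(data, 3, seed=42)
print(seeds)  # three distinct data points, usually one from each group
```

Libraries typically combine both mitigations; scikit-learn's `KMeans`, for example, exposes `init="k-means++"` for seeding and `n_init` for the number of restarts.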

Choosing the Number of Clusters

One of the main challenges in using the K-means algorithm is choosing the number of clusters ‘K’. If ‘K’ is too small, the algorithm may fail to capture the underlying structure of the data. If ‘K’ is too large, the algorithm may overfit the data and create clusters that are too specific or not meaningful.

There are several methods for choosing the number of clusters, such as the elbow method, the silhouette method, or the gap statistic. These methods involve running the K-means algorithm with different values of ‘K’ and evaluating the quality of the resulting clusters. However, these methods are not foolproof and may not always provide a clear answer, especially for complex or noisy data.
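Of these methods, the silhouette method is the easiest to show in isolation because it needs only the points and their cluster labels. For each point, a is its mean distance to the other members of its own cluster, b is its smallest mean distance to the members of any other cluster, and its silhouette is (b - a) / max(a, b). Values near 1 indicate well-separated clusters. The function below is a plain sketch that requires at least two clusters; comparing its value across candidate values of ‘K’ and preferring the highest is one way to apply it.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient; needs at least two distinct labels.

    Points in singleton clusters get a silhouette of 0 by convention.
    """
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)
            continue
        # a: mean distance to the other points in p's own cluster.
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        # b: mean distance to the nearest other cluster.
        b = min(
            sum(math.dist(p, q) for q in members) / len(members)
            for other, members in clusters.items()
            if other != lab
        )
        scores.append(0.0 if max(a, b) == 0 else (b - a) / max(a, b))
    return sum(scores) / len(scores)


pts = [(0,), (1,), (10,), (11,)]
print(silhouette_score(pts, [0, 0, 1, 1]))  # about 0.90: clean split
print(silhouette_score(pts, [0, 1, 0, 1]))  # about -0.45: poor split
```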

Dealing with Different Cluster Shapes and Sizes

Another challenge in using the K-means algorithm is dealing with clusters that are not spherical or equally sized. K-means works best when each cluster is a convex, isotropic blob, meaning its points spread out about equally in every direction around the centroid. Because every point is assigned to its nearest centroid, the boundaries between clusters are straight lines (hyperplanes in higher dimensions). This assumption may not hold for real-world data, where clusters can be elongated, irregularly shaped, or vary in size.

Several related algorithms cope better with such data. K-medoids, a close variant of K-means, uses actual data points as cluster centers and accepts any dissimilarity measure, which also makes it more robust to outliers. Alternatives such as DBSCAN, which groups points by density, and spectral clustering, which clusters a graph of pairwise similarities, can capture non-convex cluster shapes that K-means cannot. However, these methods are generally more complex and computationally intensive than the basic K-means algorithm.
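As an illustration of a density-based alternative, here is a minimal DBSCAN sketch. A point is a core point if at least `min_pts` points (itself included) lie within distance `eps`, and clusters grow by chaining core points together. Neighbors are found by brute force in O(n²) time, and the `eps` and `min_pts` values were picked by hand for this toy data of two concentric rings. With K = 2, K-means separates its two clusters with a straight line, so it cannot split the inner ring from the outer one.

```python
import math


def dbscan(points, eps, min_pts):
    """Minimal DBSCAN: returns a cluster label per point, -1 for noise."""
    labels = [None] * len(points)
    neighbors = [[j for j, q in enumerate(points) if math.dist(p, q) <= eps] for p in points]
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        if len(neighbors[i]) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            if len(neighbors[j]) >= min_pts:
                queue.extend(neighbors[j])  # j is a core point: keep expanding
        cluster += 1
    return labels


def ring(radius, n):
    """n points evenly spaced on a circle of the given radius."""
    return [(radius * math.cos(2 * math.pi * t / n), radius * math.sin(2 * math.pi * t / n))
            for t in range(n)]


points = ring(1.0, 10) + ring(5.0, 40)
labels = dbscan(points, eps=0.9, min_pts=3)
print(sorted(set(labels)))  # [0, 1]: one cluster per ring
```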

Conclusion

In conclusion, K-means clustering is a powerful and versatile algorithm in the field of Artificial Intelligence. It is simple to understand and implement, efficient to run, and capable of handling large datasets. It is widely used in various fields, from machine learning and data mining to image analysis and bioinformatics, for tasks such as exploratory data analysis, feature extraction, image segmentation, and gene classification.

However, the K-means algorithm also has its limitations and challenges. It assumes that clusters are spherical and equally sized, and it is sensitive to the initial placement of centroids. It also requires the user to specify the number of clusters, which can be a difficult decision in practice. Despite these challenges, the K-means algorithm remains a cornerstone of unsupervised learning and a valuable tool in the AI toolkit.