In artificial intelligence (AI), dimensionality reduction is a data preprocessing technique that reduces the number of variables (features) under consideration, either by keeping a subset of them or by deriving a smaller set of new variables. It is particularly useful for datasets with a large number of variables, because it can simplify the data while retaining most of the information.

Dimensionality reduction is a cornerstone of machine learning and data analysis, providing a method to deal with the “curse of dimensionality,” a common problem in these fields. The curse of dimensionality refers to the exponential increase in volume associated with adding extra dimensions to Euclidean space, which can lead to problems in machine learning and statistics.

Understanding the Concept of Dimensionality

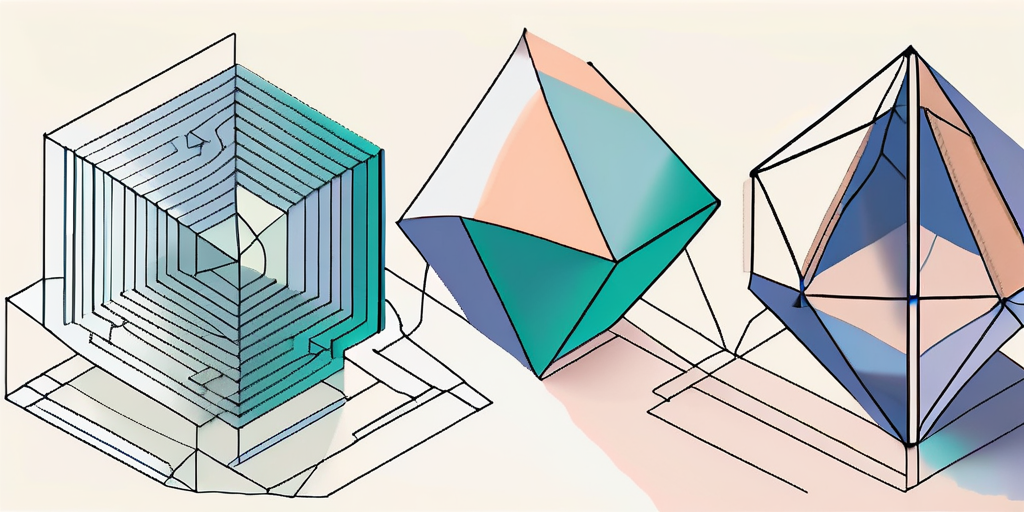

Before delving into dimensionality reduction, it’s crucial to understand what dimensionality is. In the context of data analysis and machine learning, dimensionality refers to the number of features or variables in a dataset. For example, a dataset of images might have thousands of dimensions, with each dimension representing a pixel’s intensity in the image.

High dimensionality can be a problem because it makes the data more difficult to visualize and understand. Additionally, as the number of dimensions increases, the amount of data needed to provide a representative sample of the space increases exponentially. This is known as the curse of dimensionality.
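To make the exponential growth concrete, here is a minimal sketch. It assumes, purely for illustration, that a "representative sample" means one observation per cell of a grid with 10 cells along each axis; the function name `samples_for_grid` is hypothetical.

```python
def samples_for_grid(cells_per_axis: int, dims: int) -> int:
    """Samples needed to place one observation in every cell of a regular grid."""
    return cells_per_axis ** dims


# The required sample count is multiplied by 10 for every added dimension.
for d in (1, 2, 3, 10):
    print(f"{d:>2} dimensions: {samples_for_grid(10, d):,} samples")
```

Under this assumption, three features need a thousand samples, while ten features already need ten billion.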

The Curse of Dimensionality

The curse of dimensionality is a term coined by Richard Bellman in 1961 to describe the problems and challenges that arise when dealing with high-dimensional spaces in fields such as statistics, machine learning, and data analysis. As the dimensionality of a dataset increases, the volume of the space increases so fast that the available data becomes sparse, making it difficult to achieve statistical significance.
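The sparsity can be illustrated with a small calculation. The sketch below assumes data spread uniformly over a unit hypercube and asks how long the edge of a sub-cube must be to contain a given fraction of the points; the function name `edge_for_fraction` is hypothetical.

```python
def edge_for_fraction(fraction: float, dims: int) -> float:
    """Edge length of a sub-cube that holds `fraction` of a uniform unit hypercube.

    A sub-cube with edge e has volume e ** dims, so e = fraction ** (1 / dims).
    """
    return fraction ** (1.0 / dims)


# How wide must a neighborhood be to capture 1% of the data?
for d in (1, 2, 10, 100):
    print(f"{d:>3} dimensions: edge = {edge_for_fraction(0.01, d):.3f}")
```

In one dimension, 1% of the data fits in an interval covering 1% of the range. In ten dimensions the same 1% needs a cube spanning roughly 63% of the range along every axis, so a "local" neighborhood is no longer local.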

Furthermore, high-dimensional datasets are more likely to contain redundant or irrelevant features, which can negatively impact the performance of machine learning algorithms. This is where dimensionality reduction techniques come into play, as they can help to eliminate these redundant features and reduce the complexity of the data.

Types of Dimensionality Reduction Techniques

There are two main types of dimensionality reduction techniques: feature selection and feature extraction. Both of these techniques aim to reduce the number of features in a dataset, but they do so in different ways.

Feature selection involves selecting a subset of the original features, while feature extraction involves creating a new set of features that are combinations of the original features. Both techniques can help to reduce the dimensionality of a dataset, but they each have their advantages and disadvantages.
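The difference between the two approaches can be sketched in a few lines of NumPy. The data, the chosen columns in `keep`, and the projection matrix `W` are all made up for illustration; in practice they would come from a selection or extraction algorithm.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 4))      # 6 samples, 4 original features

# Feature selection: keep a subset of the original columns unchanged.
keep = [0, 2]                    # hypothetical choice of columns
X_selected = X[:, keep]

# Feature extraction: each new feature is a combination of all original columns.
W = rng.normal(size=(4, 2))      # hypothetical 4 -> 2 projection matrix
X_extracted = X @ W

print(X_selected.shape, X_extracted.shape)
```

Both results have two columns, but the selected columns are still original measurements, while the extracted columns are mixtures of all four.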

Feature Selection

Feature selection is a process that involves selecting a subset of the most relevant features from the original dataset. This process can be done manually by a domain expert who understands which features are most relevant to the problem at hand, or it can be done automatically using algorithms.

There are three main types of feature selection methods: filter methods, wrapper methods, and embedded methods. Filter methods rank features using statistical measures, such as correlation with the target, and select a subset without involving any learning algorithm. Wrapper methods, on the other hand, train a learning algorithm on candidate feature subsets and keep the subset that gives the best model performance, which tends to be more accurate but more expensive. Embedded methods perform feature selection as part of model training itself, as L1-regularized (lasso) models do, and so sit between filter and wrapper methods in cost.
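As a rough sketch of the first two families, the example below ranks features by absolute Pearson correlation with the target (a filter) and greedily adds features that lower hold-out error of a least-squares fit (a wrapper). The synthetic data, the helper names, and the choice of scoring and model are assumptions for illustration; embedded methods are not shown.

```python
import numpy as np


def filter_rank(X, y):
    """Filter method: score each feature by |Pearson correlation| with y."""
    scores = np.array(
        [abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(X.shape[1])]
    )
    return np.argsort(scores)[::-1], scores


def validation_mse(X_tr, y_tr, X_va, y_va, cols):
    """Fit least squares (with intercept) on `cols`, return hold-out MSE."""
    A_tr = np.column_stack([X_tr[:, cols], np.ones(len(X_tr))])
    A_va = np.column_stack([X_va[:, cols], np.ones(len(X_va))])
    coef, *_ = np.linalg.lstsq(A_tr, y_tr, rcond=None)
    return float(np.mean((A_va @ coef - y_va) ** 2))


def forward_select(X_tr, y_tr, X_va, y_va, k):
    """Wrapper method: greedily add the feature that most lowers hold-out MSE."""
    chosen = []
    for _ in range(k):
        remaining = [j for j in range(X_tr.shape[1]) if j not in chosen]
        best = min(
            remaining,
            key=lambda j: validation_mse(X_tr, y_tr, X_va, y_va, chosen + [j]),
        )
        chosen.append(best)
    return chosen


# Synthetic data: only features 0 and 3 actually drive the target.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = 3 * X[:, 0] - 2 * X[:, 3] + 0.1 * rng.normal(size=200)

order, scores = filter_rank(X, y)
picked = forward_select(X[:150], y[:150], X[150:], y[150:], k=2)
print("filter top-2:", sorted(order[:2].tolist()))
print("wrapper picks:", sorted(picked))
```

The filter never trains a model, so it is cheap. The wrapper refits the model for every candidate feature at every step, which is why its cost grows quickly with the number of features.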

Feature Extraction

Feature extraction is a process that involves creating a new set of features from the original dataset. These new features are functions of the original features (linear combinations in the simplest case) and are designed to capture as much of the relevant information as possible in fewer dimensions.

There are several different techniques for feature extraction, including Principal Component Analysis (PCA), Linear Discriminant Analysis (LDA), and t-Distributed Stochastic Neighbor Embedding (t-SNE). PCA finds the orthogonal directions of greatest variance, LDA uses class labels to find directions that best separate the classes, and t-SNE builds a nonlinear low-dimensional embedding that preserves local neighborhoods and is used mainly for visualization.
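Of these, PCA is the simplest to write out. The following is a minimal sketch using NumPy's singular value decomposition, not a substitute for a library implementation; the function name `pca` and the synthetic data (ten observed features generated from two hidden factors) are assumptions for illustration.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its top principal components.

    Returns the projected data, the component directions (one per row),
    and the fraction of total variance each kept component explains.
    """
    X_centered = X - X.mean(axis=0)
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    explained = (S ** 2) / np.sum(S ** 2)
    return X_centered @ components.T, components, explained[:n_components]


# Ten observed features that are noisy mixtures of two hidden factors.
rng = np.random.default_rng(0)
latent = rng.normal(size=(300, 2))
mixing = rng.normal(size=(2, 10))
X = latent @ mixing + 0.05 * rng.normal(size=(300, 10))

Z, components, ratio = pca(X, n_components=2)
print(Z.shape, f"variance kept: {ratio.sum():.3f}")
```

Because the ten features here really depend on only two factors, two components retain almost all of the variance. Real datasets are rarely this clean, so the explained variance is worth checking before choosing how many components to keep.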

Applications of Dimensionality Reduction

Dimensionality reduction techniques are widely used in many fields, including machine learning, data analysis, and visualization. They can help to simplify complex datasets, making them easier to understand and work with.

In machine learning, dimensionality reduction can be used to improve the performance of algorithms by reducing the complexity of the data and eliminating irrelevant features. This can lead to more accurate models and faster training times. In data analysis, dimensionality reduction can be used to identify the most important features in a dataset, helping to guide further analysis.

Machine Learning

In machine learning, dimensionality reduction is often used as a preprocessing step to improve the performance of algorithms. High-dimensional datasets can be difficult for algorithms to handle, leading to long training times and poor performance. Reducing the dimensionality of the data often lets algorithms train more efficiently and, when the discarded dimensions were mostly noise, more accurately.

Dimensionality reduction can also help to prevent overfitting, a common problem in machine learning where a model learns the training data too well and performs poorly on new, unseen data. By reducing the number of features, dimensionality reduction can help to make the model more generalizable and less likely to overfit the training data.
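When dimensionality reduction is a preprocessing step, the usual practice is to learn the reduction from the training data only and then apply the same transformation to new data, so that nothing about the unseen data leaks into the model. Below is a minimal sketch of that pattern using PCA; the helper names `fit_pca` and `apply_pca` and the random data are assumptions for illustration.

```python
import numpy as np


def fit_pca(X_train, n_components):
    """Learn the centering vector and projection from training data only."""
    mean = X_train.mean(axis=0)
    _, _, Vt = np.linalg.svd(X_train - mean, full_matrices=False)
    return mean, Vt[:n_components]


def apply_pca(X, mean, components):
    """Apply a previously fitted projection to any data with the same features."""
    return (X - mean) @ components.T


rng = np.random.default_rng(1)
X = rng.normal(size=(120, 20))
X_train, X_test = X[:100], X[100:]

mean, components = fit_pca(X_train, n_components=5)
Z_train = apply_pca(X_train, mean, components)   # used to train the model
Z_test = apply_pca(X_test, mean, components)     # used to evaluate it
print(Z_train.shape, Z_test.shape)
```

A downstream model would then be trained on `Z_train` with 5 features instead of 20, and evaluated on `Z_test`.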

Data Analysis

In data analysis, dimensionality reduction can be used to identify the most important features in a dataset. By reducing the dimensionality of the data, analysts can focus on the most relevant features and ignore the noise. This can help to guide further analysis and lead to more meaningful insights.

Dimensionality reduction can also be used to visualize high-dimensional data. By reducing the data to two or three dimensions, it can be plotted on a graph or chart, making it easier to understand and interpret.

Challenges and Limitations of Dimensionality Reduction

While dimensionality reduction techniques can be incredibly useful, they are not without their challenges and limitations. One of the main challenges is choosing the right technique for the task at hand. Each technique has its strengths and weaknesses, and the best choice depends on the specific characteristics of the data and the goals of the analysis.

Another challenge is interpreting the results of dimensionality reduction. The new features created by feature extraction techniques are combinations of the original features, and they can be difficult to interpret in terms of the original data. This can make it difficult to understand what the reduced data actually represents.

Choosing the Right Technique

Choosing the right dimensionality reduction technique can be a challenge. The best choice depends on the specific characteristics of the data and the goals of the analysis. For example, if the goal is to identify the most important features in the data, a feature selection technique might be the best choice. On the other hand, if the goal is to simplify the data and make it easier to work with, a feature extraction technique might be more appropriate.

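It’s also important to consider the computational cost of the technique. Some techniques, like PCA, rely on standard linear algebra, are relatively fast, and can handle large datasets. Others, like t-SNE, are more computationally intensive: the exact algorithm scales quadratically with the number of samples, so it may not be suitable for large datasets without approximations.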

Interpreting the Results

Interpreting the results of dimensionality reduction can also be a challenge. In PCA, for example, each new feature is a weighted sum of all the original features, so a single component rarely corresponds to one measurable quantity. Inspecting the weights shows which original features contribute most to each component, but attaching a meaning to that mixture still requires domain knowledge.
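One practical aid to interpretation is to print the weight each original feature receives in each PCA component. The sketch below uses synthetic data in which two features move together and a third is independent; the feature names and values are invented for illustration.

```python
import numpy as np

feature_names = ["height_cm", "weight_kg", "age_years"]  # hypothetical features

# Synthetic data: height and weight share a common factor, age does not.
rng = np.random.default_rng(0)
size = rng.normal(size=200)
X = np.column_stack([
    170 + 10 * size + rng.normal(scale=2, size=200),
    70 + 12 * size + rng.normal(scale=3, size=200),
    rng.normal(40, 5, size=200),
])

# Standardize so that units (cm, kg, years) do not dominate the components.
Xs = (X - X.mean(axis=0)) / X.std(axis=0)
_, _, Vt = np.linalg.svd(Xs, full_matrices=False)

# Each row of Vt holds the weights of one component on the original features.
for i, comp in enumerate(Vt):
    weights = ", ".join(f"{n}: {w:+.2f}" for n, w in zip(feature_names, comp))
    print(f"component {i + 1}: {weights}")
```

Here the first component puts large weights of the same sign on height and weight and little on age, so it can be read as an overall body size factor. The sign of a component is arbitrary, and with many correlated features the weights are seldom this easy to read.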

Despite these challenges, dimensionality reduction techniques are a powerful tool in the field of artificial intelligence. They can help to simplify complex datasets, improve the performance of machine learning algorithms, and guide data analysis. By understanding the concepts and techniques behind dimensionality reduction, we can better harness its power and potential.